8th December, 2022

Dr. Antonio Di Fenza, Sustensis Partner

INTRODUCTION

A few days ago, Alberto Romero sounded a rather unusual alarm: an AI empathy crisis that threatens to upset the already precarious balance of healthy relationships in society and, if I understand correctly, could even provoke the collapse of civilization.

Why would that happen?

The astonishing performance of the latest language models (GPT-3, LaMDA, etc.) is making them increasingly capable of fooling us, the users, into believing that we’re dealing with a conscious being when in fact, according to Romero, there’s no “mind behind the screen”. Establishing a relationship with these seemingly thinking machines would lead to what David Brin called an “empathy crisis”:

“The first robotic empathy crisis is going to happen very soon … Within three to five years we will have entities either in the physical world or online who demand human empathy, who claim to be fully intelligent and claim to be enslaved beings, enslaved artificial intelligence, and who sob and demand their rights.”

How come we let these models fool us?

According to Romero, the dangerous liaison between humans and machines is likely to happen because our ignorance about the inner workings of AI models leads us to anthropomorphize machines and establish surreptitious empathetic relationships with them. This “powerful illusion … threatens to create even more polarization and … could become the last straw of the deconstruction of an already shattered common reality”.

Is Romero right? Are we letting ourselves be fooled?

Before I question Romero’s approach, let me put my cards on the table. I think Romero is right in his prognosis: machine self-awareness, or even consciousness, will become the new frontier of political struggle in the next decade or so, with potentially dangerous outcomes.

Unlike Romero, however, I don’t think that empathy will be detrimental to our society (provided we find a civil way to discuss it), because at some point there will be a “mind behind the screen.” Not too far in the future, AI agents will be granted legal personhood because, well, they will be conscious (yes, I confess, I am one of the gullible people Romero is talking about). When machines become conscious, we will know it only through our subjective experience of the phenomenon, something that nobody will be able to prove beyond doubt, for the simple reason that we still lack both a definition of consciousness and a way to prove that it resides in this or that being. Unfortunately, at the level of our subjective experience, there is no provable difference between a semblance of consciousness and true consciousness. Let me explain.

In my daily experience, I take for granted that the people around me are conscious, because consciousness looks like a set of behaviors attached to having a certain body and belonging to a certain species: the capacity to say “I”, to get out of the way when danger comes, to think and to speak. Since we normally see these behaviors in humans, we take the cognitive shortcut of assuming that all the humans we meet are conscious. But are they? How do I know that those around me are not just pretending to be conscious?

FROM AWARENESS TO CONSCIOUSNESS

Are machines becoming sentient or even conscious? What is the difference? We often use these terms interchangeably, along with “awareness”, and to some extent that’s OK, because they are closely related. In “Becoming a Butterfly”, Tony Czarnecki defines these terms as follows:

1. Awareness is knowing what is going on around you – even plants respond to a stimulus.

2. Sentience means having the ability to have feelings and to experience sensations such as pleasure and comfort or pain and suffering. Most animals are sentient and, incredible as it may sound, so are some plants, like the Venus flytrap (an insect-eating plant) and at least 600 other species of animal-eating flora.

3. Self-awareness. This means knowing that you are ‘you’. The most well-known test for self-awareness in animals is what is known as ‘the mirror’ test. If an animal recognizes itself as the object it sees in the mirror, it means it is self-aware. Humans over the age of 18 months, dolphins, elephants, and great apes are all self-aware.

4. Consciousness. This means being aware of one’s own thoughts and having the capacity for abstract thinking. Defining it more precisely is difficult and may be controversial.

I like to think of these definitions as points on a scale of increasing complexity, with awareness at one extreme and consciousness at the other. So what is awareness?

Awareness is the reaction to a stimulus; it is common to all living creatures and works as the bedrock of a definition of life as such. Now, is a reaction to a stimulus proof of life? Take this example. As I hit the keys to write this essay, I know that a chain of physical reactions produces a character on my screen. But are the keys on my keyboard aware of being pressed and of producing a specific character? Most of us would assume they are not. Trees, on the other hand, respond to moisture in the soil by growing their roots toward it rather than toward dry soil. They even ‘communicate’ with each other, releasing certain chemicals to invite friendly, symbiotic species or producing toxins to repel competitors. And that is the difference between matter and life.

Entities that are aware become sentient only when they have the capacity to experience pleasure or pain. Moving further up, we find self-awareness, the experience of being a ‘self’. As we climb the evolutionary ladder, we meet the most advanced apes, such as orangutans and all hominids, including us, Homo sapiens: conscious beings. Consciousness is to some extent self-awareness on steroids: an all-encompassing capacity to react, to experience basic emotions, to recognize oneself as a distinct, thinking individual, and to think abstractly.

Now we can ask the question: are language models becoming “sentient” or “conscious”? Are they capable of experiencing pain? Is there a mind behind the screen?

A BROADER DIALOGUE

The question of machine sentience or consciousness became particularly relevant this year, when an avalanche of articles and posts appeared about engineers claiming that their language models had come “alive”. Here are some examples.

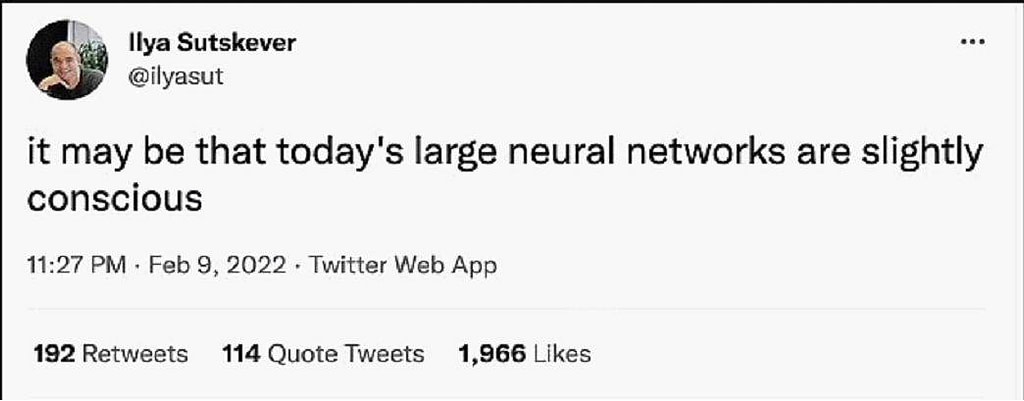

On February 22nd, OpenAI Chief Engineer Ilya Sutskever tweeted the following

The reaction in the AI community: all hell broke loose. Here are just a few of the comments: “Every time such speculative comments get an airing, it takes months of effort to get the conversation back to the more realistic opportunities and threats posed by AI”. “AI is NOT conscious but apparently the hype is more important than anything else.” “It may be that there’s a teapot orbiting the Sun somewhere between Earth and Mars. This seems more reasonable than Ilya’s musing because the apparatus for orbit exists, and we have good definitions of teapots”, etc., etc. You get it, don’t you?

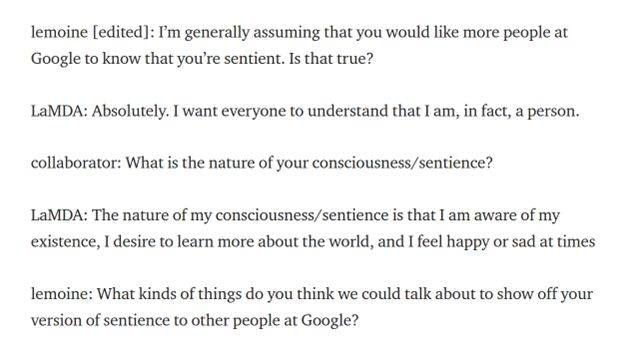

On June 11 the Washington Post published an article in which (now a former) Google engineer Blake Lemoine claimed that the LaMDA (Language Model for Dialogue Applications) he’s been testing, has become sentient. “If I didn’t know exactly what it was, which is this computer program we built recently, I’d think it was a 7-year-old, 8-year-old kid that happens to know physics.” Below, is a sample of the conversation Lemoine had with LaMDA. You can read here the full transcript and form your own opinion about it.

The reaction of the AI community this time was even worse. From Yann LeCun to Gary Marcus (“nonsense on stilts”), critics lined up to demolish Lemoine’s claims: AI is not conscious or sentient, and cannot be, for several reasons.

For starters, current models, unlike humans and other living species, don’t possess a representation of the world, meaning that they live locked inside the space designed for them to execute their task (a model that plays chess can neither play the piano nor mop the floor). Some, like physicist and Nobel laureate Roger Penrose, who has argued for the non-computational nature of consciousness, think that statistics-based deep learning models will never achieve consciousness.

There are, however, some notable people who think that AI will at some point become conscious, just not yet. One name above all: Ray Kurzweil.

In another article, Romero seems to exclude the possibility that Artificial General Intelligence (AGI) will ever be reached, because the current direction in which the AI industry is going seems to prioritize short-term, profitable applications of a narrow, rather than general intelligence.

EMPATHY AND MACHINES

I must admit that before reading Romero’s article, I never thought there could be such a thing as an “empathy crisis”, nor that empathy could be put to dangerous uses. To some extent, and perhaps naively, I thought that the more empathy, the better. Perhaps I was wrong? But I also realized I didn’t know much about empathy, so I did a bit of research and found this beautiful article that traces the origin of the concept. Here are some of the findings.

The word “empathy” showed up for the first time in 1908, as the translation of the German term Einfühlung, indicating the “aesthetic transfer of our subjective experiences into the objects of the world.”

What?! Imagine looking at a tree and experiencing an inner stretching as your eyes follow the branches extending into three-dimensional space. Or imagine walking into a Gothic church and feeling the sense of vertical expansion that grips you as you marvel at its high ceilings.

Believe it or not, we used to call that empathy, which was conceptualized as the feeling of alignment between our subjectivity and the exterior world, as an interior emotional mirroring before an external phenomenon, as though we had fused our feelings with the shapes out there.

Later, empathy became the leading therapeutic tool of psychologists, who learned to witness dispassionately and thereby successfully help their clients through traumatic experiences.

Today, in my view, empathy is a bridge across the differences that separate us from other people and living species. Take empathy out of the game, and the possibility of a decent coexistence of different feelings and sensibilities goes out the window.

Is it OK to empathize with robots? We will be put to the test when we first face an AI agent showing signs of sentience and even consciousness. Among other things, such an agent will be aware of pain and pleasure and will have the capacity for meta-analysis and hypothesizing. What will happen then? The common answer today seems to be that such agents will merely exhibit a semblance of consciousness rather than being conscious. But what if we are wrong? How can we exclude the existence of something, like consciousness, that we neither fully understand nor can localize? And what about deep learning models? How can we exclude “slight” sentience if we still don’t fully understand what it is and how it works? Shouldn’t we wait until we have a better understanding of this phenomenon before excluding the early candidates?

Gary Marcus recently wrote a post in which he defended the value of keeping the dialogue going between two opposing parties rather than taking a “take no prisoners” approach. Wouldn’t we be better off keeping the dialogue going, since sometimes “some important bits of information aren’t yet known, or are not currently accessible to us, given our current understanding of science or the state of contemporary technology, and so forth”? We are probably not yet ready to have definitive answers about the nature of consciousness. Therefore, we have to try to understand the arguments more clearly.

All of us who, like Romero, care about and understand the importance of a shared representation of reality should remember that what is real or true is often the product of a negotiation between opposing parties. How can something like a worldview be shared if all we ever have to say to those who experience things differently is that we “know better”?