Tony Czarnecki, Managing Partner, Sustensis

London, 3rd July 2023

I recently attended a panel debate at Chatham House, partially inspired by Tony Blair’s Institute on Global Governance. He presented a case for Britain to play a major role in governing AI, although his own Institute takes, in its key policy document “A New National Purpose”, a rather dim view of the current state of AI in the UK. For example, the UK contributes only 1.3% of the aggregate computing power of the Top 500 supercomputers, less than Finland and Italy, and it was the only leading nation to record a decline in AI publications last year.

A significant part of the debate at Chatham House focused on the forthcoming global AI summit to be held in London this autumn. While few details of the conference agenda are available, Prime Minister Rishi Sunak announced in early June, after a meeting with President Biden, that Britain aims to play a global role in AI to ensure its immense benefits while prioritizing safety.

For Rishi Sunak, establishing the most powerful AI agency in London could be one of the milestones in restoring Britain’s position as a truly global power after Brexit. However, the challenge is that an agency responsible for global AI regulation already exists in Paris: the Global Partnership on Artificial Intelligence (GPAI), with 46 members, including the US, the UK, and the EU. Therefore, the need to create yet another global AI agency is not immediately apparent. So, what role could Britain play in delivering safe AI?

In my recently published book, “Prevail or Fail – A Civilizational Shift to the World of Transhumans,” I argue that there is a need to establish not just one, but two new global agencies. These agencies would cover a more difficult and more important area of delivering ‘safe AI’ than GPAI does, because AI poses not only a risk comparable to that of the nuclear or aviation industries but also an existential risk that could lead to the extinction of the human species. However, there is little agreement among AI scientists and researchers on whether AI indeed poses an existential threat. This disagreement was evident in the recent Munk Debate between two Turing Award winners, who held opposing views on the question. A warning letter published by the Future of Humanity Institute in May, signed by thousands of prominent AI researchers and scientists, stated that AI is indeed an existential threat to humanity.

The fundamental disagreement on whether AI poses an existential threat has two causes: commercial interests and differences in the perception of what AI truly is. The first, commercial interests, is obvious, as tighter regulation may significantly reduce profits. The second is that AI is a relatively new field of science and technology, which only gained prominence in this century. Consequently, some fundamental concepts in AI are still ill-defined. For instance, there is little agreement among AI scientists on the current stage of AI development. Is it still Artificial Narrow Intelligence (ANI), which only surpasses human intelligence in a specific area, or has it progressed to Artificial General Intelligence (AGI), which is far more intelligent than any human?

There is also no consensus on when AGI or its ultimate version, Superintelligence, will emerge. The release of ChatGPT on November 30th, 2022, for example, came as a shock to both users and developers. AI scientists and developers were not expecting such an advanced (near AGI) system for at least another 20 years. This surprise development has caused panic and led to an impromptu meeting between Biden and Sunak.

Since governments have been informed that AGI is still decades away and not an existential threat, it’s not surprising that they view AI as merely a new technological invention—a highly advanced tool. However, AI is not solely a tool; AI represents a new form of intelligence that will soon surpass our own capabilities. This is why my book addresses the importance of distinguishing between AI regulation and AI development control.

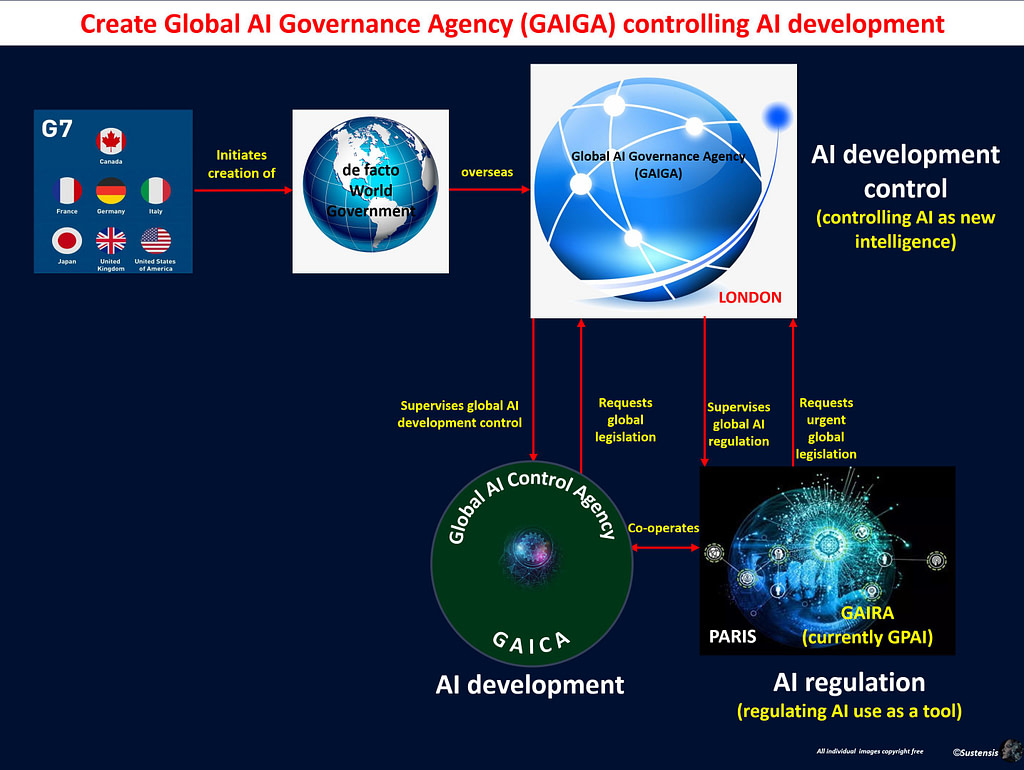

AI regulation pertains to legislation ensuring the safe use of AI products and services, similar to regulations in the aviation or pharmaceutical industries. Without effective AI regulation, there is a risk of AI distorting politics and contributing to a global disorder. While this is a risk, it is not an existential one. Governments should primarily be responsible for mitigating this risk, and that’s where the Global Partnership on Artificial Intelligence (GPAI) comes into play. Established by the G7 Group in 2021, GPAI operates from OECD offices in Paris and is currently the only global AI regulator. In my book, I propose transforming GPAI into the Global AI Regulation Agency (GAIRA) since its scope needs to be expanded.

However, since AI is fundamentally an intelligence that competes with humans, we must minimize the risk of AI spiralling out of human control before it is adequately imbued with human values and preferences. In such a scenario, AI could become a malicious entity posing an existential threat. This is the area that currently lacks an agency: one responsible for AI development control. Such control should be entrusted to the AI sector itself because AI’s capacity for exponential self-improvement could drastically change its risk level within hours. Governmental institutions would be unable to react promptly and enact legislative changes in that time. A rapid response is possible only once the necessary control mechanisms have been put in place, such as the ability to temporarily disable the Internet or mobile networks.

Currently, there is no global oversight over the development of advanced AI or the assessment of potential risks associated with new AI versions—such as humanoid robots with far superior intelligence compared to ChatGPT, like the upcoming Ameca or Optimus models. Already, there are approximately 100 ChatGPT-like assistants, some even more powerful than the original. In a few years’ time, when they evolve into AGIs, each of them will pose an existential risk to humanity, much like biological laboratories developing new viruses. Attempting to control such an explosion of AI would be impossible. The only way to minimize this risk is by developing a single Superintelligence in a global AI Development Centre under the control of a new agency. Given that two-thirds of global AI research and development occurs in the US, it is logical for such an advanced AI Development Centre to be located there. The new agency would act as its immediate controller, capable of rapid response if anything goes wrong. Governments, which tend to change procedures at a linear pace at best, cannot manage this type of control effectively.

A potential solution to establish this new agency for AI development control could be the conversion of the existing US Partnership on AI (PAI) into an independent Consortium—a self-regulating body for the AI sector, which I refer to as the Global AI Control Agency (GAICA). The key argument for transforming it into an independent Consortium is the success of the Internet’s W3C Consortium, founded in 1994 and led by Tim Berners-Lee. Over nearly 30 years, it has become one of the most impactful and influential organizations, with around 450 members despite its minimal budget.

GAICA’s primary role would involve integrating all advanced AI resources to ensure seamless and effective control over AI development, capable of instant response in cases of AI emergencies, such as an attempt by AI to escape human control. While GAICA might be registered in the US as an independent organization, it would be supervised by an international body.

Therefore, in addition to AI regulation (GAIRA) and AI development control (GAICA), there is a crucial need for a third agency focused on global AI governance. I propose naming this agency the Global AI Governance Agency (GAIGA). Its primary role would be the global supervision and coordination of the operations of GAIRA and GAICA. However, GAIGA would also have additional responsibilities related to managing the transition to a time when we coexist with significantly more intelligent AGI and, eventually, Superintelligence.

Some of the legislative reforms proposed by GAIGA may even require constitutional changes. These changes may not directly relate to the emergence of Artificial General Intelligence (AGI) but rather to the necessary shift towards a new type of civilization. Consequently, GAIGA may soon evolve into an organization with significant global legislative powers to ensure human control over AI, even after it becomes AGI. Achieving this goal would necessitate the involvement of internationally experienced legal organizations, many of which are based in London. From this perspective alone, London seems to be the ideal location for the operation of GAIGA. Additionally, London has been a prominent hub for AI research, with the London-based DeepMind, now part of Google, leading the field together with OpenAI, the creators of ChatGPT. Furthermore, Britain has four universities—Oxford, Cambridge, Imperial College, and UCL—among the top ten universities worldwide. It is also the second powerbase, after the USA, in the fields of medicine, chemistry, and particle physics.

Therefore, the AI summit in London should result in the creation of three agencies that broadly cover the scope of GAIGA, GAICA, and GAIRA. Given the urgency of the situation, it is essential to prioritize improvisation rather than strive for a perfect design. These organizations should be operational right now, as any delay could prove detrimental. This means that, for now, we may not even have a de facto World Government in place; as depicted in the diagram below, the G7 Group would supervise these agencies in the interim.

However, once AGI emerges, the role of an agency like GAIGA will become considerably more significant, necessitating far-reaching changes in international legislation. Unfortunately, achieving a World Government in the foreseeable future is impossible. Many still hold onto the hope that the United Nations (UN) can be transformed into such an organization. The World Federalist Movement (WFM), which was established shortly after the founding of the UN and is still affiliated with it, aimed to achieve this noble objective. Imagine what the world would look like today if the WFM’s goal had been to create a World Government based on a “coalition of the willing,” involving many but not all countries. Such a de facto World Government would be the only realistic option today. But how can we achieve it, and what role might Britain play in its creation?

When I was writing my book, ‘Democracy for a Human Federation,’ in 2018, a federated European Union seemed to be the most suitable candidate for gradually transforming into a de facto World Government. However, following the pandemic and the war in Ukraine, another organization emerged that had not even been considered at the time: the G7 Group. Comprising Canada, France, Germany, Italy, Japan, the United Kingdom, and the United States, the G7 Group represents a combined 30.7% of the world’s GDP and might become an initiator of the creation of a de facto World Government.

Now, due to the war in Ukraine, we have another option. In October 2022, the European Political Community (EPC) was created. Although its objectives are not yet well defined, it may provide a more feasible and rapid path towards establishing a de facto World Government. The advantage of this option is that it would involve a relatively shallow federation, where shared economic policies and a common currency would be of lesser significance. However, the challenge lies in establishing a common platform for countries willing to join the federation. This platform must be built upon Universal Values of Humanity and common democratic principles. With 47 countries involved, including Turkey, Azerbaijan, and Armenia, achieving consensus may prove to be a formidable task unless decisions are made using a double majority voting system, similar to that employed in the EU.

From 1st January, 2024, Britain will hold the six-month rotating presidency of the EPC. This may be the moment for Britain to shine and fulfil Churchill’s dream of establishing the United States of Europe, quickly expanding it into a de facto World Government.

I have only outlined the potential responsibilities of GAIGA. Given the wide range of its duties, it may quickly evolve into an organization resembling a technocratic government. However, its emergence would be driven by different factors. Technocratic governments are typically formed when no party can create a governing coalition, resulting in the appointment of technocrats, often specialists or scientists. Italy’s recent government under the premiership of Mario Draghi is a case in point. GAIGA, on the other hand, could become a technocratic government because politicians may simply be unable to understand some of the reasons behind the necessary decisions put forward by AGI and, soon after, by Superintelligence. If GAIGA is established based on the principles proposed here, it may assume the role of the world’s technocratic government by around 2030.

In summary, we must proceed vigorously with legislative regulation of AI development and its use for two reasons. First, regulating the use of AI products and services and controlling AI’s development may delay the loss of total control over AI. Second, such legislation may help mitigate the potential Global Disorder in this decade resulting from an almost complete lack of preparedness for the unprecedented transition to a world of unimaginable abundance and unknowns. The global AI Summit in London presents an exceptional opportunity for Britain to lead the world through this perilous period.