Tony Czarnecki, Managing Partner, Sustensis

(This article was originally published on Medium as ‘Don’t panic – we can keep AI genie in the bottle’.)

There was an important mini-summit at the beginning of June between President Joe Biden and the British Prime Minister Rishi Sunak. The subject was AI as a potentially existential threat to humans. That was a departure from the optimistic talk politicians usually prefer about the incredible benefits AI can deliver. The current generation of AI is called Artificial Narrow Intelligence (ANI), which is far superior to humans but only in one area, such as multilingual translation. However, quite soon a new type of AI will emerge – Artificial General Intelligence (AGI), which may indeed present an existential threat to humans. Both statesmen agreed that we need an immediate global intervention to ensure that AI matures into humanity’s friend when it becomes AGI, rather than our greatest enemy. There is still no precise agreement on what AGI is, but this broad definition may be sufficient:

The most heated debate among AI researchers and developers is about when AGI may emerge. Last year, the most commonly cited date was 2050. After the release of ChatGPT, the average date given is 2030, although there are good reasons to think it may arrive even earlier. That shift in the expected date of AGI’s emergence has caused the current panic among politicians, who are completely unprepared for the much earlier time when it will coexist with us, and even less so for the existential risk that it represents.

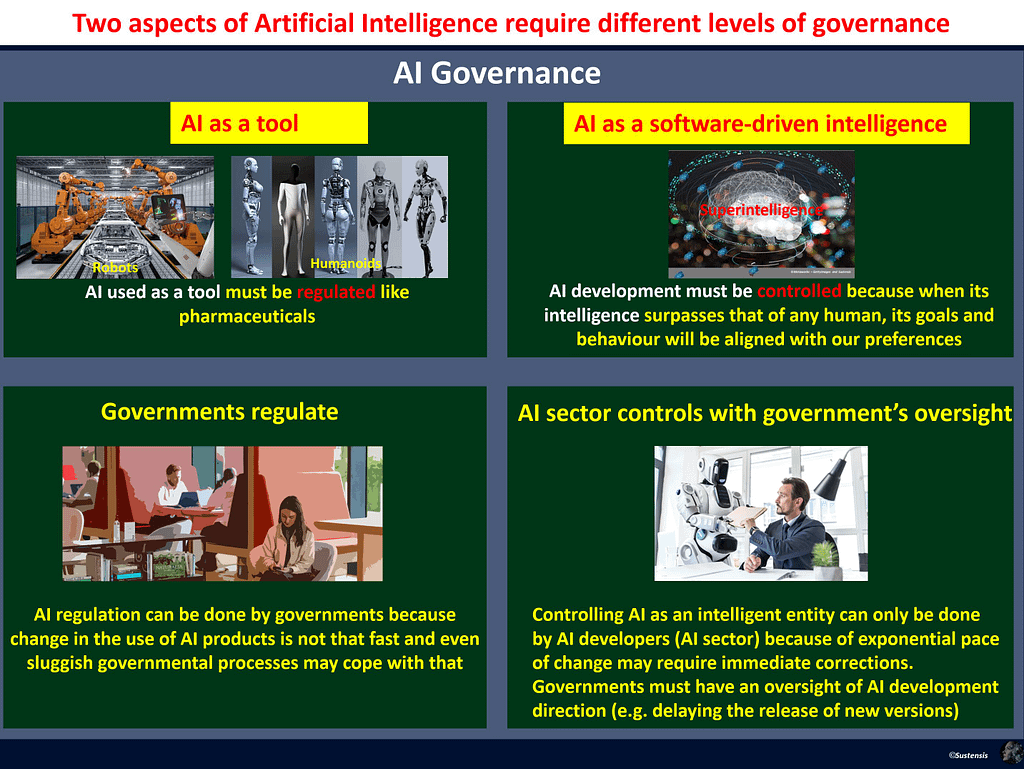

To mitigate that risk, the strengthening of current regulations, such as the soon-to-be-ratified EU Artificial Intelligence Act, is being proposed. But AI regulation, as it is understood and practised today, will have a negligible effect on mitigating the existential risk of AI. Politicians must consider that there is a clear distinction between AI regulation, which is about how AI products are used, and AI development control, which is necessary to prevent AI from escaping human supervision and thus becoming an existential threat. Both are needed and are part of overall AI governance, but they require different procedures and have a different impact on the future of humans.

To understand the problem, it may help to see two aspects of AI. Currently, it is being used mainly as the most sophisticated tool, capable of solving many difficult problems. That area has been regulated by governments, and it should remain so. The inappropriate use of AI products or services may, for example, distort politics and contribute to a Global Disorder, but it is not a direct existential threat. Therefore, regulating it should be largely left to governments, since the pace of change in the use of AI products is relatively slow compared with the rapid pace at which new generations of AI are released. Although stretched, current regulatory processes, if accelerated, may be capable of implementing the required policy changes in time.

However, AI is also a new type of software-driven intelligence, which will soon be far superior to humans. Therefore, key AI developers need to control the new AI capabilities, for example by checking whether the goals it can set itself, and the human values and preferences it should follow, have been properly specified and coded. That needs to be tested daily. If the AI goals or values are wrongly specified, then such an AI, which is already capable of writing its own computer code, may escape from human control. If such an escape happens when AI has already become AGI, it may not be possible to get it back under human control.

Therefore, the most advanced AI development should be controlled directly by those who develop it, i.e., the AI sector itself, because the exponential improvement in AI’s capabilities may require almost instantaneous correction to maintain continuous supervision of AI’s goals and behaviour. But how might such control be implemented?

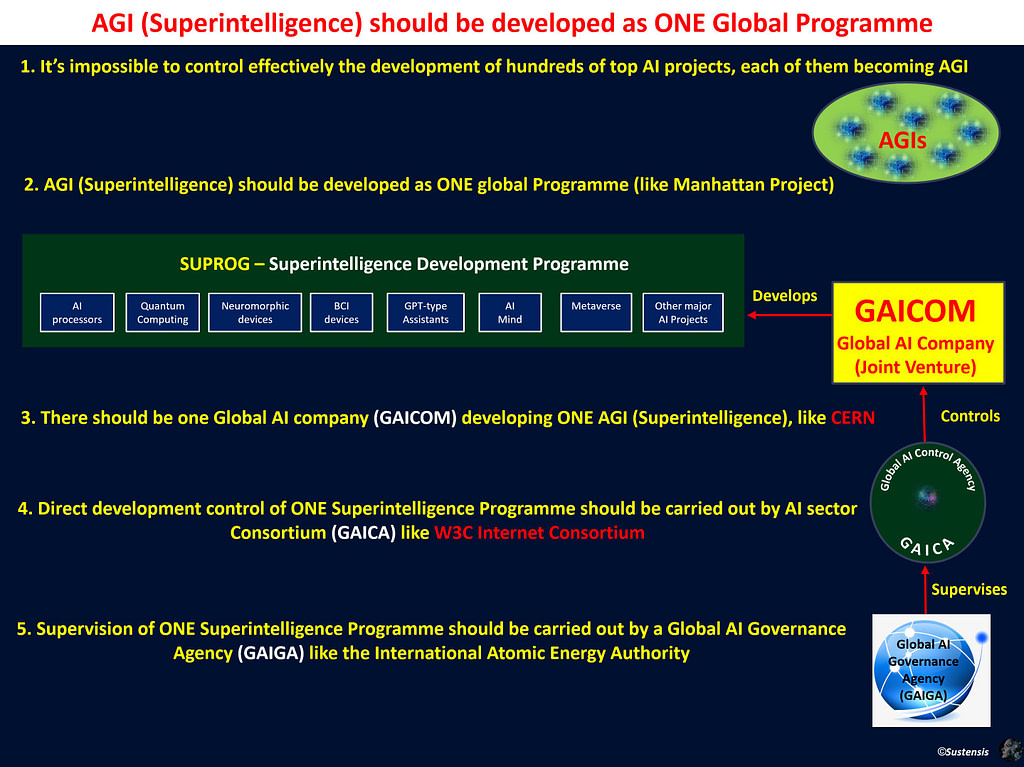

The problem becomes more complicated when we look at how the future AGI is being developed. There are now about 1,000 companies and AI research organizations in the world specializing in delivering the most advanced AI solutions. Their number is growing daily. Each of these companies will continuously improve its flagship AI agent. Quite soon, mainly through the process of self-learning, one of these companies will develop the first AGI. Even if the AI of the remaining 999 companies has not reached the AGI level by then, it will be a matter of weeks before each of them has its own AGI. This is what happened after the release of ChatGPT, when a few weeks later about 40 companies had a similar product, of which Anthropic’s Claude is now far more powerful than the most recent version of ChatGPT/GPT-4. Each of these hundreds of competing AGIs will become a potential existential risk for humans. Controlling so many AGIs is simply impossible – see p. 1 in the diagram below.

In my recently published book, ‘Prevail or Fail – a Civilisational Shift to the World of Transhumans’ https://www.amazon.co.uk/dp/B0C51RLW9B, I describe how it could be done. Here, I only introduce some components which directly affect the AI sector’s role in controlling the development of the most advanced AI.

It seems that the only way to control AI effectively is to develop just one global AI, the future Superintelligence. To do that, we need to consolidate all advanced AI research projects, resources, and development facilities into one large Superintelligence Development Programme (SUPROG) – p. 2 in the diagram. It would consist of hundreds of the most advanced projects, such as brain mapping and quantum computing, and the most advanced hardware devices, such as neuromorphic neurons or digital chips. I need to clarify the difference between Artificial General Intelligence (AGI) and Superintelligence. AGI, as mentioned earlier, is a self-learning intelligence capable of solving any task better than any human. Superintelligence, by contrast, is a single networked, self-organizing entity, with its own mind and goals, exceeding all human intelligence. The first breakthrough will happen when AI reaches human-level intelligence and becomes AGI. Such AGI could even be embedded into a humanoid robot, such as Optimus.

Superintelligence will have to be developed by one Global AI Company (GAICOM), set up as a Joint Venture company – see p. 3 in the diagram. The reason for centralizing the development of the most advanced AI capabilities in this way is that it is the only way to deliver effective control over hundreds of companies developing advanced AI systems. It could be compared to international organizations such as CERN or the ITER tokamak project, but its role would be far greater, since it would have to ensure that control of humanity’s future remains in our hands.

Since nearly 70% of the AI sector is located in the USA, the development centre of Superintelligence should be located there. The US has already been providing significant organisational support for the AI sector, even under Donald Trump’s presidency. That has continued during President Joe Biden’s term at the White House. The ‘AI summit’ between President Biden and Prime Minister Rishi Sunak is a further confirmation of that trend.

To create GAICOM, companies developing the most advanced AI technologies would be requested to legally split that business from the rest of the company and add it to GAICOM as part of a Joint Venture company. The contributing company would be compensated in GAICOM shares, which would not be publicly traded, to eliminate the impact of the market.

GAICOM could be set up on a voluntary basis, but it is more likely that it will have to be created under an international agreement, or initially established in the US under a Presidential Order. I recognize that it is a legal and economic conundrum, but we need to remember that we are in a situation similar to a pre-war period, or to the current war in Ukraine. The world has to make sacrifices to achieve a common civilisational goal – to control Superintelligence before it starts controlling us.

Perhaps the first two companies to contribute some of their AI-related assets might be Alphabet (Google) and Microsoft. They should separate the Google browser from Alphabet, and the Bing and Edge browsers from Microsoft. These browsers should then be integrated into one browser, and Google and Microsoft would form the first Joint Venture company of the future GAICOM. That would end what I call ‘the war of the browsers’.

GAICOM, and by extension SUPROG, must be controlled by the AI sector itself because of the complexity and urgency of the problems which may occur during the development of Superintelligence. Perhaps the best way to do it is to create a Consortium, similar to the World Wide Web Consortium (W3C), founded in 1994 and led by Tim Berners-Lee since its inception. For nearly 30 years it has been one of the most successful organizations in history.

In 2016, several key US organizations, such as Amazon, Facebook, Google, DeepMind, Microsoft, IBM and Apple, created the Partnership on AI (PAI), a not-for-profit organization. As of June 2023, PAI has over 120 members from around the world, including tech companies, non-profit organisations, and academic institutions. Therefore, I would propose to convert PAI into a Global AI Control Agency (GAICA) – p. 4 in the diagram. GAICA should be entirely apolitical, focusing on the effectiveness of the control process, and would of course include representatives from most countries, as does the W3C.

However, if the Superintelligence Programme is to be a global enterprise, it must have strong international oversight. After all, it will be developing a new intelligence, far superior to humans. Therefore, while acknowledging the necessity of direct control over AI development by GAICA as an independent Consortium, GAICA must ultimately be supervised by an international organization. That could be the organisation which should be created during the Global AI Summit in London this autumn, as proposed during the ‘AI summit’. I call it the Global AI Governance Agency (GAIGA) – p. 5 in the diagram. It should sit above both the Global Partnership on AI (GPAI), which was set up by the G7 in 2020, is operated by the OECD in Paris and is responsible for global AI regulation, and GAICA, which would be responsible for global AI development control. GAIGA would also have additional functions related to managing a civilisational shift to the time when we will be coexisting with AGI and, later on, with Superintelligence.

Should an organisation like GAIGA be created in London this autumn, it would be very good news indeed. It would then be possible to set up the Superintelligence Programme from the top, rather than from the bottom, as suggested above. This is further proof of how fast the world is changing.

In summary, the release of ChatGPT enabled hundreds of millions of people to have a glimpse of what AI may be about. That in turn has created a media frenzy and made politicians aware of the incredible benefits AI may deliver, but also of the existential threat to humanity if it gets out of human control. Despite all the odds, humans may still be able to keep the AI genie in the bottle, at least for much longer than would otherwise have been the case. That will give us more time for a transition to a new civilisation where we will coexist with Superintelligence. That may happen as early as the next decade.

Tony Czarnecki is the Managing Partner of Sustensis, a Think Tank on Civilisational Transition to Coexistence with Superintelligence. His latest book: ‘Prevail or Fail – a Civilisational Shift to the World of Transhumans’ is available on Amazon: https://www.amazon.co.uk/dp/B0C51RLW9B.