In four polls conducted in 2012 and 2013, top AI specialists gave a median estimate of between 2040 and 2050 for a 50% chance of Superintelligence emerging. In May 2017, several AI scientists from the Future of Humanity Institute at Oxford University and from Yale University published a report, “When Will AI Exceed Human Performance? Evidence from AI Experts”, reviewing the opinions of 352 AI experts. Overall, those experts believe there is a 50% chance that Superintelligence (AGI) will occur by 2060.

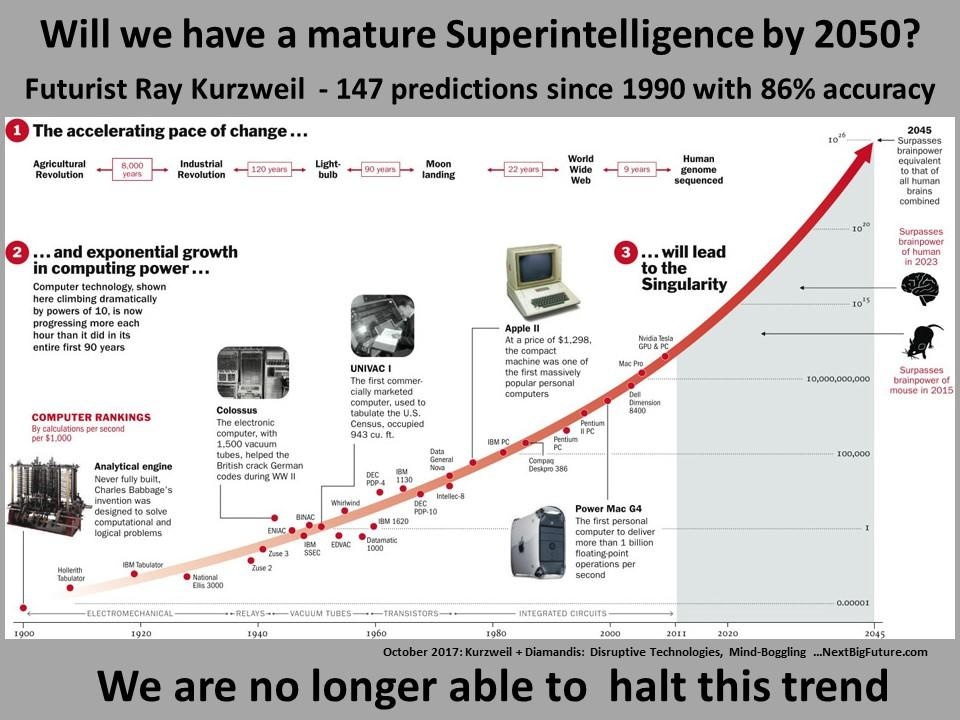

Independently, Ray Kurzweil, probably the best-known futurist and forecaster, whose 147 predictions made in the 1990s have proved about 80% correct, claims that Superintelligence will emerge earlier, by 2045, as illustrated below.

Even more interesting is the fact that Kurzweil’s predictions have been quite steady over the last 20 years, while other experts’ opinions have changed significantly. Here are some examples:

- In the 1990s Kurzweil predicted that AI would achieve human-level intelligence in 2029 and Superintelligence in 2045, whereas most AI professionals at that time believed it would happen in the 22nd century at the earliest. So the gap between Kurzweil’s prediction and those of other AI experts on the emergence of Superintelligence was more than 50 years.

- In the 2000s, AI experts’ predictions indicated that AGI would most likely be achieved by about 2080, but Kurzweil still maintained 2045 as the most likely date. The gap was 35 years.

- Recently, as in the above report, the 352 AI experts surveyed put a 50% chance on Superintelligence (AGI) emerging by 2060. Since Kurzweil’s prediction still stands at 2045, the gap has narrowed to about 15 years.
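The gaps quoted for the 2000s and for the 2017 survey are simple subtractions of Kurzweil’s 2045 date from the experts’ date. A minimal sketch of that arithmetic follows; the dictionary labels and the function name `forecast_gap` are illustrative choices, not anything taken from the report.

```python
# Kurzweil's forecast year for Superintelligence, as quoted in the text.
KURZWEIL_YEAR = 2045

# Expert forecast years quoted in the text; the period labels are illustrative.
expert_forecasts = {"2000s": 2080, "2017 survey": 2060}


def forecast_gap(expert_year, kurzweil_year=KURZWEIL_YEAR):
    """Return how many years later the experts' date is than Kurzweil's."""
    return expert_year - kurzweil_year


gaps = {period: forecast_gap(year) for period, year in expert_forecasts.items()}

for period, gap in gaps.items():
    print(f"{period}: gap of {gap} years")
```

Only the two periods with an explicit expert year are included, since the 1990s view is given as a century rather than a date.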

Additionally, human intelligence will not improve significantly, whereas AI capabilities are expected to keep improving in line with Moore’s law, doubling roughly every 18 months.
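To get a feel for what such a pace would imply, here is a minimal sketch. It assumes the 18-month figure is read as a doubling period and that the doubling continues unchanged, which is an idealisation rather than a forecast; the function name `growth_factor` is hypothetical.

```python
# Assumed doubling period in months (the 18-month reading of Moore's law).
DOUBLING_PERIOD_MONTHS = 18


def growth_factor(years, doubling_period_months=DOUBLING_PERIOD_MONTHS):
    """Return the multiplicative growth after `years` of steady doubling."""
    doublings = years * 12 / doubling_period_months
    return 2 ** doublings


# The 15-year span matches the current gap between the two forecasts.
for years in (3, 15):
    print(f"After {years} years: x{growth_factor(years):,.0f}")
```

Under these assumptions, 15 years corresponds to ten doublings, or roughly a thousandfold increase, while human capability stays essentially flat.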

There are, of course, AI researchers who hold almost the opposite view, saying that Superintelligence (AGI) will never be built or that it will never surpass humans in all its capabilities, although they agree that AI could, and already does, outperform humans in some areas while remaining completely ignorant in most others. That is, for example, the view of Kevin Kelly, who lists five myths of AI in his article ‘The Myth of a Superhuman AI’. According to Kelly, these myths are:

- Artificial intelligence is already getting smarter than us, at an exponential rate

- We’ll make AIs into a general-purpose intelligence, like our own

- We can make human intelligence in silicon

- Intelligence can be expanded without limit

- Once we have exploding Superintelligence it can solve most of our problems.

Then he challenges those myths with the following assertions:

- Intelligence is not a single dimension, so “smarter than humans” is a meaningless concept (which to a large extent depends on what we mean by ‘intelligence’ – see an earlier tab)

- Humans do not have general purpose minds, and neither will AI – this is just a linguistic twist

- Emulation of human thinking in other media will be constrained by cost – cost is probably the lowest hurdle; energy might be a more realistic constraint

- Dimensions of intelligence are not infinite – quite an obscure statement, which tells us nothing to the contrary of the 5 ‘myths’

- Intelligences are only one factor in progress – the same comment applies as for the first assertion.

Without going into a detailed discussion, here are some further comments on these challenges to the myths about AI. It is sufficient to say that his challenges are rather weak. Only the first challenge has some merit. However, it may also be incorrect. I do not think that most AI scientists believe that Superintelligence (AGI) will be a single-dimension intelligent agent. A single-dimension AGI is simply AI, i.e. artificial intelligence that may be more capable than humans in one particular area, such as car navigation.

However, the pathway to a smarter-than-human intelligence will lead through a multitude of intelligences, all uploaded into one gigantic knowledge silo, which with a suitable set of algorithms will attain multidimensional intelligence millions of times better than that of humans. Observing the progress of AI, it seems clear that machines will surpass human intelligence not at any single point in time but gradually, in more and more areas of intelligence, until one day they become immensely more intelligent than humans in all areas.

On challenge 2, I disagree: we do have a general-purpose intelligence, and that seems obvious. Challenge 3 can be dismissed outright: when we have a mature Superintelligence, the cost of most things will be close to zero, apart perhaps from energy. Challenge 4 is irrelevant. Challenge 5 could be true, but it depends on what one means by ‘progress’.

Predicting the future meaningfully, so that it motivates us to prepare for it properly, can only be done by assigning probabilities, as those 352 AI researchers have done. By contrast, in 1969 the Russian dissident writer Andrei Amalrik wrote a book, ‘Will the Soviet Union Survive Until 1984?’, with a clear reference to Orwell’s novel ‘Nineteen Eighty-Four’. He did not provide any probability of that happening by 1984. He was wrong by 7 years: the Soviet Union collapsed on 26 December 1991. However, he was not wrong about the trend and the way it might happen.

With Superintelligence we may not know the way in which it may be achieved, but we can clearly see the trend. That allows us to suggest with at least 50% probability that it may happen by 2050.

Tony Czarnecki, Sustensis