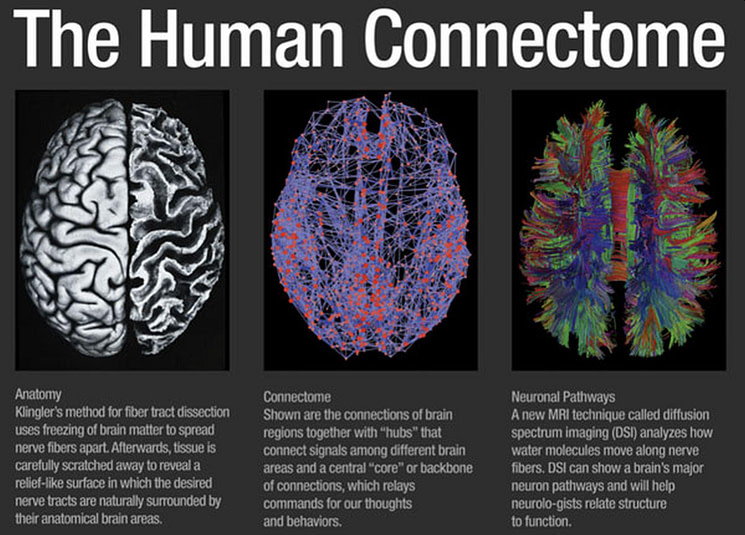

This is quite different from the methods discussed thus far. There, the assumption was that Superintelligence would be developed from scratch through a series of ever more intelligent AI agents. This method assumes instead that we start with ‘a system’ that already has the “right” motivations and increase its intelligence from there. The obvious candidate for such ‘a system’ would be a human being (or a group of human beings). Bostrom proposes to scan human brains and then augment their capabilities with AI until such an augmented mind becomes Superintelligence. There are at least two such ongoing projects, of which the EU’s Connectome project, which aims to decode all the connections between the neurons in a human brain by 2025, is probably the most promising. Such an approach, in various forms and degrees, is favoured by Transhumanists, who envisage that at some stage the human species will merge with Superintelligence.

The next question is whether we should “allow” the new breed of humanoids to define ethics for themselves, or whether they should be jump-started with our ethics. In my view, we should try as far as possible to transfer human ethics to the new species. Therefore, whichever organisation takes on the task of saving Humanity, there is an urgent need to formally agree a renewed set of Universal Values and Universal Rights of Humanity, so that the world can reduce the level of existential risk before the AI-based humanoids (Transhumans) adopt them. Beyond that, however, when our human ethics is redefined at some stage, the values and the corresponding ethics are bound to change. Ethics is not static.

As Bostrom notes in his book, augmentation might look pretty attractive if all other methods turn out to be too difficult to implement. Furthermore, it might end up being a “forced choice”. If augmentation is the only route to Superintelligence, then augmentation is, by default, the only available method of motivation selection. Otherwise, if the route to Superintelligence is via the development of AI, augmentation is not on the cards.

But a “solution” to the control problem by augmentation is not perfect either. If the system we augment has some inherent biases or flaws, we may simply end up exaggerating those flaws through a series of augments. It might be wonderful to augment a Florence Nightingale to Superintelligence, but it might be nightmarish to do the same with a Hitler. Furthermore, even if the starter-system is benevolent and non-threatening, the process of augmentation could have a corrupting effect.

Applying redefined Values of Humanity to Superintelligence

It is clear from the last paragraph of the preceding section that the least risky strategy for delivering Superintelligence would be the process of augmentation (although it may also have some inherent dangers). Apart from the enormous technical problems that will emerge, an equally important issue will be the kind of values that the new augmented species, which would be more than just a digital Superintelligence, should have. That is why the need to define the top values of Humanity, the foundation of human ethics, is so important.

These values will constitute the new Human Values Charter. We will then need to establish procedures, perhaps enshrined in law, for transferring these values into the various shapes and types of AI robots and humanoids. This would create a kind of framework in which the Human Values Charter becomes the core of every AI agent’s ‘brain’. Such a framework would form a boundary beyond which no AI agent could act, and it would be implemented using guidelines such as the 23 Asilomar principles mentioned earlier. Only then could developers define specific goals and targets for such AI agents, always referencing the Human Values Charter as a constraint on the agents’ objectives. In practical terms, the best way forward could be to embed the Human Values Charter in a sealed, tamper-proof chip, perhaps protected by quantum encryption, and to implant it in every intelligent AI agent. Such a procedure could be monitored by an independent global organization, which would manufacture and distribute the chips and license the agents before they can enter public space. But even if such a chip is developed, there is still a danger that confusion might arise from misinterpretation of what is expected of Superintelligence.
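To make the idea of the Charter as a constraint on an agent’s objectives a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Action` fields, the two charter rules and the function names are hypothetical and do not come from any real charter, standard or library. The sketch only shows the ordering described above: the Charter first removes forbidden actions, and only then is the developer-defined goal used to pick among what remains.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Tuple


@dataclass(frozen=True)
class Action:
    """A candidate action with hypothetical, pre-assessed properties."""
    name: str
    expected_benefit: float  # score against the developer-defined goal
    harms_humans: bool       # assumed to be known in advance
    deceives_humans: bool    # assumed to be known in advance


# A charter rule returns True when the action is permitted.
CharterRule = Callable[[Action], bool]

# Two illustrative rules standing in for a Human Values Charter.
CHARTER: Tuple[CharterRule, ...] = (
    lambda a: not a.harms_humans,
    lambda a: not a.deceives_humans,
)


def permitted(action: Action, charter: Tuple[CharterRule, ...] = CHARTER) -> bool:
    """An action is permitted only if every charter rule allows it."""
    return all(rule(action) for rule in charter)


def choose_action(candidates: Iterable[Action],
                  charter: Tuple[CharterRule, ...] = CHARTER) -> Optional[Action]:
    """Pick the most beneficial action among those the charter permits."""
    allowed = [a for a in candidates if permitted(a, charter)]
    if not allowed:
        return None  # nothing is permitted, so the agent does nothing
    return max(allowed, key=lambda a: a.expected_benefit)


candidates = [
    Action("fast_but_harmful", 9.0, harms_humans=True, deceives_humans=False),
    Action("safe_but_misleading", 8.0, harms_humans=False, deceives_humans=True),
    Action("honest_and_safe", 6.0, harms_humans=False, deceives_humans=False),
]
best = choose_action(candidates)
print(best.name if best else "no permitted action")
```

Note that the sketch simply assumes that somebody has already decided whether an action “harms” or “deceives” humans. Deciding that is exactly the problem of interpretation discussed next, and no amount of code removes it.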

There are a number of proposals on how to ensure that Superintelligence acquires from humans only those values that we want. Nick Bostrom mentions them in his book “Superintelligence: Paths, Dangers, Strategies”, especially in the chapter on ‘Acquiring Values’, where he has developed quite a complex theory on the very process of acquiring values by Superintelligence.

The techniques he specifies aim to ensure a true representation of what we want. They are very helpful indeed but, as Bostrom himself acknowledges, they do not resolve the problem of how we ourselves interpret those values. And I am not talking just about Humanity agreeing the Charter of Human Values, but about expressing those values in such a way that they have a unique, unambiguous meaning. This is the well-known problem of “Do as I say”: quite often what we say is not exactly what we really mean. Humans communicate not just with words but also with symbols, and quite often reinforce the message with body language to avoid misinterpretation where words have a double meaning. The same problem is familiar from writing emails: because relying on the meaning of words alone invites misinterpretation, we use emoticons. Would it be possible to communicate with Superintelligence using body language in both directions?

How then could we further minimize misunderstanding? One possibility, drawing on John Rawls’s book “A Theory of Justice”, would be to create algorithms that include statements like these:

- do what we would have told you to do if we knew everything you knew

- do what we would have told you to do if we thought as fast as you did and could consider many more possible lines of moral argument

- do what we would tell you to do if we had your ability to reflect on and modify ourselves

We may also envisage, within the next 20 years, a scenario in which Superintelligence is “consulted” on which values to adopt and why. Two options could apply here (if we humans still have ultimate control):

- In the first one, Superintelligence would work closely with Humanity and would essentially be under the total control of humans.

- The second option, which I am afraid is more likely, assumes that once Superintelligence reaches the stage of a benevolent Technological Singularity, it will probably be much cleverer than any human being in every aspect of human thinking and ability. From that moment its intelligence would increase exponentially, and within a few weeks it could be millions of times more intelligent than any human being. Even if it is benevolent and has no ulterior motives, it may conclude that our thinking is constrained, or far inferior to what it knows and to how it sees what is ‘good’ for humans.

Therefore, it could overrule humans anyway, for ‘our own benefit’, like a parent who sees that what a child wants is not good for the child in the longer term, because the child simply cannot comprehend all the consequences and implications of getting what it wants. The question remains how Superintelligence would deal with values that are strongly tied to our feelings and emotions, such as love or sorrow. In the end, it is emotions that make us predominantly human, and quite often they dictate solutions to us that are utterly irrational. What would Superintelligence’s choice be if it based its decisions on rational arguments only? And what would it be if it also included, if that is possible, the emotional aspects of human activity, which after all make us more human but also less efficient and, from an evolutionary perspective, more vulnerable and less adaptable?

The way Superintelligence behaves and how it treats us will largely depend on whether, at the Singularity point, it has at least basic consciousness. My own feeling is that if digital consciousness is possible at all, it may arrive before the Singularity event. In that case, and assuming throughout that Superintelligence acts benevolently on behalf of Humanity from the very beginning, one mitigating solution might be for the decisions it proposes to include an element of uncertainty, by taking into account some emotional and value-related aspects.

In the long term, I think there is a high probability that the human race as we know it will evolve into a different, non-biological species. In a sense, this would mean the extinction of the human species. But why should we be the only species not to become extinct? After all, everything in the universe is subject to the law of evolution. We have evolved from apes and we will evolve into a new species, unless some existential risk annihilates civilization before then. We can speculate whether there will be augmented humans, synthetic humans, or entirely new humanoids, i.e. mainly digital humans with uploaded human minds, or even something entirely different that we cannot yet envisage. It is quite likely that humans will co-exist with two or even three such species for some time, but ultimately we humans in our biological form will be gone at some stage.
