Many computer scientists think that consciousness involves two stages: 1 – accepting new information and storing and retrieving old information, and 2 – cognitive processing of all that information into perceptions and actions. If that’s right, then one day machines will become conscious. That view has recently gained some significant support. In October 2017, Dr. Stanislas Dehaene of the Collège de France in Paris, together with Drs. Hakwan Lau and Sid Kouider, concluded that consciousness is “a multi-layered construct”. As they see it, there are two kinds of consciousness, which they call ‘dimensions’ C1 and C2. Both dimensions are necessary for a conscious mind, but one can exist without the other:

- Subconsciousness – dimension C1, containing information and the huge range of processes, with the required algorithms, in the brain where most human intelligence lies. This is what enables us to choose a chess move or spot a face without really knowing how we did it. The researchers believe that this type of consciousness has already been represented in digital form and is comparable to the kind of processing behind the AI algorithms embedded in DeepMind’s AlphaGo or the Chinese Face++.

- Actual consciousness – dimension C2, containing and monitoring information about oneself, which splits into two distinct types and is not yet present in machine learning:

  - The ability to maintain a huge range of thoughts at once, all accessible to other parts of the brain, making abilities like long-term planning possible. In this area there is already some progress. For example, in 2016 DeepMind developed a deep-learning system that can keep some data on hand for use by an algorithm when it contemplates its next step. This could be a first step toward global information availability.

  - The ability to obtain and process information about ourselves, which allows us to do things like reflect on mistakes.

This proposition correlates well with a theory that Actual Consciousness (C2) is driven by billions of networks that bind together information from Subconsciousness (C1), following stochastic probability similar or identical to the principles of quantum mechanics.

Should those recent findings and proposals from the Collège de France in Paris become generally accepted, they might resolve the questions posed by Roger Penrose about the nature of consciousness. He may be right that consciousness is not just a brain-mind construct but is also underpinned by phenomena similar to those present in quantum mechanics, though not in the way he suggests, i.e. in the ‘hardware’ (microtubules). Consciousness might behave in a way similar to some aspects of the Uncertainty Principle in quantum mechanics, in that it would act not at the level of individual synapses but at the level of neural networks, which connect millions of synapses and give an averaged response at the macro level, e.g. lifting a hand. Since that response would be only probabilistic, rather than based on the yes-no state of an individual synapse (hence its similarity to quantum phenomena), there would be no conflict with the preservation of free will. That might also be in line with the thinking of people like Raymond Tallis, who so strongly defends the validity of free will (i.e. the unpredictability of human actions). That is how the scientific world may slowly be arriving at some common understanding of the nature of consciousness. By extension, it may also make it more feasible to upload a human mind, together with its consciousness, to a Superintelligent being.

Let me now summarize the conclusions about the nature of consciousness, as far as science seems to indicate, and the consequences for uploading a human mind to Superintelligence. Consciousness is probably one of the very few areas in science where academics cannot agree on the meaning of the subject they are studying. Probably, and this is still only probably, the multi-layered structure and organization of human consciousness has been best summed up in the recent research mentioned above by the academics from the Collège de France:

“Consciousness is a structural (functional) organization of a physical system, which operates at two levels: 1 – Subconsciousness – accepting, storing and retrieving information using a huge range of processes with the required algorithms – this is where most of human intelligence and knowledge lie. 2 – Actual consciousness containing information about oneself, which it turns into a wide range of ‘thoughts’, all accessible at once to all parts of the brain, which it is able to have continually monitored and processed, outputting them as perceptions and actions.”

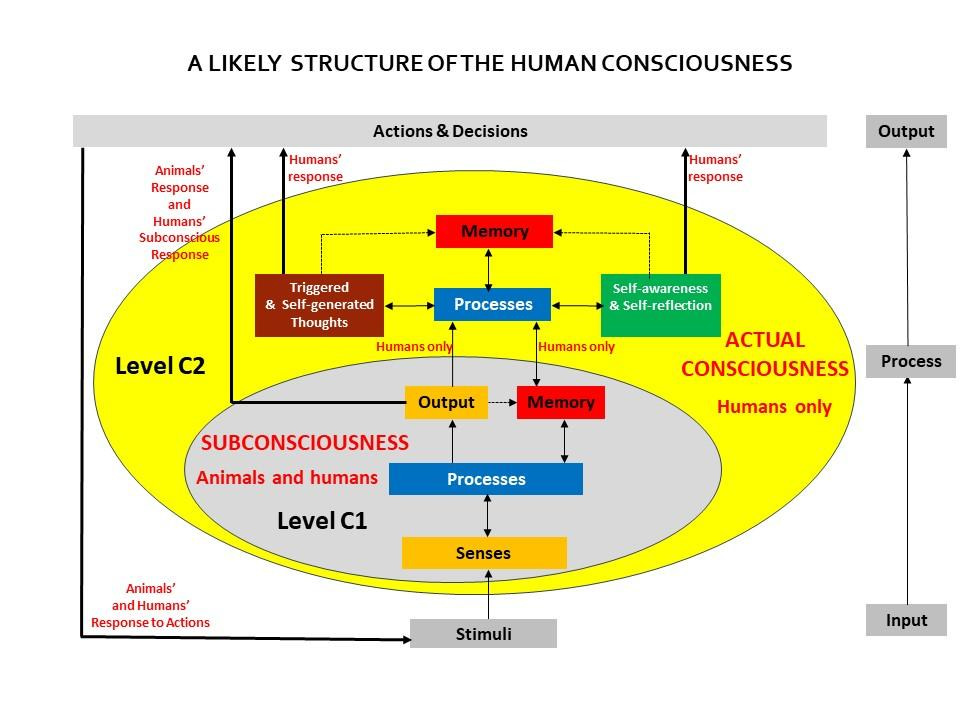

I have converted that definition into the diagram shown here. Looking from a wider perspective, it shows very clearly that consciousness is the processing centre that turns external stimuli into actions and decisions. It is an ever-present model of reality: Input-Process-Output, applicable to all forms of matter and energy.

A likely organization of human consciousness as proposed by the researchers at the Collège de France (Stanislas Dehaene, 2017) – graph by Tony Czarnecki

The second level of consciousness proposed by the scientists from the Collège de France is present only in humans and, to a lesser degree, in some mammals. This is a unique type of consciousness, which can generate stimuli within its own ‘processing centre’. That often leads to multiple loops in which an initial self-generated thought is compared against the past (the memory) and processed again, until the final decision or action is made. It concurs quite well with a definition of human consciousness proposed by the American theoretical physicist Michio Kaku, who in his book ‘The Future of the Mind’ proposes that: ‘Human consciousness is a specific type of consciousness that continuously evaluates the past to simulate the future to make a decision to achieve a goal’.
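The loop described above (evaluate the past, simulate the future, decide towards a goal) can be caricatured in a few lines of Python. This is only a toy sketch under my own assumptions, not a model proposed by Kaku or Dehaene: the `memory` list, the numeric outcomes and the function names `simulate_outcome` and `decide` are all hypothetical, invented purely for illustration.

```python
from statistics import mean


def simulate_outcome(action, memory):
    """Predict an action's outcome as the average of its remembered outcomes."""
    past = [outcome for past_action, outcome in memory if past_action == action]
    return mean(past) if past else None


def decide(goal, memory, candidate_actions):
    """Pick the action whose simulated outcome lies closest to the goal."""
    best_action, best_gap = None, float("inf")
    for action in candidate_actions:
        predicted = simulate_outcome(action, memory)
        if predicted is None:
            continue  # nothing in memory to evaluate this action against
        gap = abs(goal - predicted)
        if gap < best_gap:
            best_action, best_gap = action, gap
    return best_action


# Hypothetical memory of (action, outcome) pairs; the numbers are made up.
memory = [("wait", 2.0), ("wait", 4.0), ("run", 9.0), ("run", 7.0), ("hide", 5.0)]
choice = decide(goal=8.0, memory=memory, candidate_actions=["wait", "run", "hide"])
print(choice)
```

The point of the sketch is only the shape of the loop: each candidate thought is checked against memory, turned into a predicted future, and compared with the goal before an action is chosen.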

Since we still have at least a decade of full control over the development and functionality of Superintelligence, we should create it only in such a way that at some stage it will become conscious. If Stanislas Dehaene et al. are right about the nature of consciousness, then the final element we need is some supporting evidence of how such a process can manifest itself in nature. Fortunately, the most recent research provides some indication that we may be very close to identifying what consciousness is, how it emerges and possibly how it might be developed in an inorganic being. I do not want to go into the details of the various theories of consciousness, so I will mention only very briefly one of the most promising, which is supported by some clinical evidence. It is the Conscious Electromagnetic Information (CEMI) field theory, proposed mainly by Johnjoe McFadden (I provide a simplified version here).

It proposes that every time a neuron fires to generate an action (through a changed electrical potential in its synapse), that signal is cascaded down the line to thousands of other neurons. The overall result of that synchronous firing is an electric current (the source of a neuron’s firing is a biochemical reaction, which in turn generates a micro electric current). Should that current be sufficiently strong, it becomes the source of a disturbance in the surrounding electromagnetic field. Every time such a set of neurons fires, it creates the same electromagnetic field. McFadden has proposed that such an electromagnetic field, generated by the brain, creates a representation of the information held in neurons. If consciousness is information, it is at least plausible that the theory explains the nature of consciousness and the mechanism by which humans or some animals create conscious experience. Studies cited in support of the theory indicate that conscious experience correlates not with the number of neurons firing, but with the synchronicity of that firing, because only synchronous firing could create a strong enough electromagnetic field.
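Why synchronicity should matter more than the sheer number of neurons can be seen from a simple superposition argument. The sketch below is my own toy illustration, not part of McFadden’s theory: it assumes each neuron contributes an oscillation of unit strength, and the names `field_amplitude` and `mean_unsynchronized_amplitude` are hypothetical. Contributions that are in phase add up in proportion to their number, while contributions with random phases largely cancel and grow only roughly with the square root of their number.

```python
import cmath
import math
import random


def field_amplitude(phases):
    """Magnitude of the summed signal when each neuron contributes a
    unit-strength oscillation with the given phase (in radians)."""
    return abs(sum(cmath.exp(1j * phase) for phase in phases))


def mean_unsynchronized_amplitude(n_neurons, trials=200, seed=0):
    """Average summed amplitude for n_neurons firing with random phases."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_neurons)]
        total += field_amplitude(phases)
    return total / trials


# 100 neurons firing in phase versus 1,000 neurons firing at random phases.
synchronized = field_amplitude([0.0] * 100)
unsynchronized = mean_unsynchronized_amplitude(1000)
print(f"100 synchronized neurons:    {synchronized:.1f}")
print(f"1000 unsynchronized neurons: {unsynchronized:.1f}")
```

In this toy model the smaller synchronized group produces the stronger combined signal, even though ten times as many neurons fire in the unsynchronized case.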

However, according to McFadden, such information (knowledge), embedded in a synchronous electromagnetic field, is the first level of consciousness, called awareness (Level C1 in Dehaene’s definition of consciousness), which all living creatures possess. For awareness to occur, it must be supported by feedback (an input) to the brain, initiating the firing of another set of synchronized neurons, which will create a desired response (an output). So, awareness (sentience) is the ability to know, perceive, feel, recognize and act on events. A brick does not have any perception or feeling of anything, so it is unaware of what happens to it and to its environment. But all animals are aware, e.g. an animal escaping from a chasing lion.

Such an approach to the nature of consciousness provides a clearer view on a number of questions in this area. For example, it allows for a gradual development of consciousness over millions of years of life’s evolution, which might have started with automatic, chemistry-based responses in plants ‘seeking’ the best nutrients, i.e. being somewhat aware of the environment. In the animal world, the level of awareness and then self-awareness would be directly correlated with brain size relative to body mass and with the complexity of neural connections. When these two parameters reached a tipping point, human consciousness was ignited in Homo sapiens and other hominins, such as the Neanderthals. If we accept this notion, then human consciousness, which has a physical substrate and differs from animal self-awareness only in its much higher level of complexity and self-organization of billions of stimuli and memory cells, can be replicated in a substrate other than a biological one, such as ‘silicon’.

Therefore, the main assumption taken here is that once Superintelligence emerges, it may at some stage become a conscious agent.
