Options to control AI – lessons from the Manhattan Project

London, 17/6/2023

(This is an extract from the book ‘Prevail or Fail – a Civilisational Shift to the World of Transhumans’ by Tony Czarnecki.)

Before I introduce the proposed Superintelligence Development Programme (SUPROG), described in chapter 7, Part 2, I would like to discuss the similarities with the Manhattan Project, which should have really been called a Manhattan Programme, since it consisted of hundreds of projects.

The Manhattan Project produced the first nuclear weapons during World War II. It was directed by Major General Leslie Groves, with Robert Oppenheimer as director of the Los Alamos Laboratory, where the bombs were designed. The project began in 1939 and grew to employ nearly 130,000 people at its peak, costing nearly US$2 billion. It developed two types of atomic bomb: a gun-type fission weapon and an implosion-type nuclear weapon. Little Boy, the first nuclear bomb used in warfare, used uranium-235, and Fat Man used plutonium. The project also gathered intelligence on the German nuclear weapon project.

It was a massive and complex research and development project. Looking at the objective and the structure of what will ultimately become a Superintelligence Development Programme, we may make the following comparisons:

- Civilisational perspective. This is perhaps the most important similarity. The Manhattan Project was meant to save civilisation from a derailment of a kind humans had never known. Had Hitler won the war, Humanity would have been split into the ‘Untermenschen’, sub-humans, and the Aryan race, the rulers of the world.

- SUPROG (Superintelligence Development Programme). If this Programme fails, it may have all the negative consequences already mentioned.

- Collaboration and coordination: The success of the Manhattan Project was largely due to a close collaboration and coordination between scientists, engineers, and military personnel from different countries, institutions, and backgrounds. The project required the pooling of resources, expertise, and information from a wide range of sources, and the ability to work together towards a common goal.

- SUPROG. It must become exactly that – a global programme, consolidating all available resources under one roof.

- Technological innovation: The Manhattan Project was a major driver of technological innovation, particularly in the fields of nuclear physics, chemistry, and engineering. The project required the development of new materials, methods, and processes, as well as the design and construction of large-scale facilities and equipment. The technical challenges faced by the project led to the development of modern technologies that have had lasting impact in many fields.

- SUPROG. The similarities with the Manhattan Project are obvious. However, what distinguishes this Superintelligence Development Programme is that it will be developed in an environment that will change at a nearly exponential pace.

- Ethical considerations: The Manhattan Project raises important ethical considerations around the development and use of nuclear weapons. The use of atomic bombs on Hiroshima and Nagasaki resulted in significant human suffering and raised questions about the morality of using such weapons in warfare. The ethical issues surrounding the development and use of nuclear weapons continue to be relevant today, and the Manhattan Project serves as a reminder of the importance of considering the ethical implications of scientific and technological advances.

- SUPROG. The ethics of AI is coming to the fore because of the most recent examples of ChatGPT and other LLMs generating biased content. But the difference is that we must not only ensure that AI-produced content is bias-free, but also that we maintain control over the AI’s self-development, based on human-compatible values and goals.

- National security and international relations: The Manhattan Project also highlights the importance of national security and international relations in shaping scientific research and development. The project was driven by the fear of Germany developing its own atomic bomb, and the geopolitical tensions of the time influenced the decisions around the development and use of nuclear weapons.

- SUPROG. It has to be seen as an international initiative aimed at maintaining control of AI. However, just as Germany and Japan were the key adversaries during WWII, we may be dealing with countries that will use the most advanced AI in order to rule the world.

- Military-type control. The whole Manhattan Project was run by Major General Leslie Groves. It was wartime, so this was almost self-evident. However, because it was the military, and not a scientific organization, that was running it, the supply of the necessary resources had to be, and was, delivered on time.

- SUPROG. I cannot emphasize strongly enough how important it is to run the one AI programme like a military campaign in a situation that resembles a pre-war period. You may be surprised by that comparison, but that is what it really is. We are starting to fight the first battle for the control of AI, so that it does not become malicious. But if AI gets out of our control, there may be a real war to get it back under our control, and we may not win it.

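- Deadlines. The Manhattan Project was run as a race against a feared German bomb and delivered its first working nuclear device in July 1945. Germany, as it turned out, never came close to building one, but nobody running the project could count on that at the time.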

- SUPROG. Controlling AI development will only be successful if all the deadlines are met.

These are the lessons we can learn from the Manhattan Project. But there is an additional problem with controlling AI. We need to convince the public and, most importantly, world leaders that such an invisible threat is real. One may call a maturing Superintelligence ‘an invisible enemy,’ assuming it turns out to be hostile towards humans, much as the Covid-19 pandemic was called one. Calling Covid an invisible enemy was an excuse used by governments to claim that it was not possible to see the threat coming, and hence that they were not responsible for the consequences. Governments seldom see that spending money now minimizes the risk of potential future disasters. Such spending should be seen as a long-term insurance policy. But for most governments long-term policies are not attractive, since their horizon is at best the next election. The implications of such short-termism may be profound, since this invisible existential threat may materialize in the long term. To mitigate that risk, global, continuous control of AI development must start right now.

The second problem is that not many AI experts are willing to say when AGI will emerge, which may also be the time when humans lose control over AI. AI scientists and top AI practitioners prefer not to specify such a time, using instead more elusive terms like ‘in a few decades or so.’ However, without a highly probable date for when we may lose control over AI, world leaders will not feel obliged to discuss the existential risk for humans that such a momentous event may trigger.

Therefore, those who see that problem should be bold enough to spell out the most likely time and justify it. Ray Kurzweil is an exception, saying in June 2014: “My timeline is computers will be at a human level such that you can have a human relationship with them, 15 years from now” [32] (by 2029). Since then, he has been sticking to that date. I am broadly in line with Kurzweil’s prediction and have assumed that AGI will emerge by 2030. You will find the arguments for and against that date further on in this book.

Kurzweil’s credibility is further supported by his prediction on the emergence of Superintelligence. In an interview with ‘Futurism’ in May 2017, he said it may emerge by 2045 [33]. At an AI conference in 1995, the participants estimated that it might emerge in two hundred years [34]. But the averaged results of four surveys of 995 AI professionals, published in February 2022, indicate that the most likely date for the arrival of a mature Superintelligence is about 2060, just 15 years after Kurzweil’s prediction [35]. In any case, if his predictions are correct, most people living today will be in contact with Superintelligence, which may be our last invention, as the British mathematician I. J. Good observed in 1966.

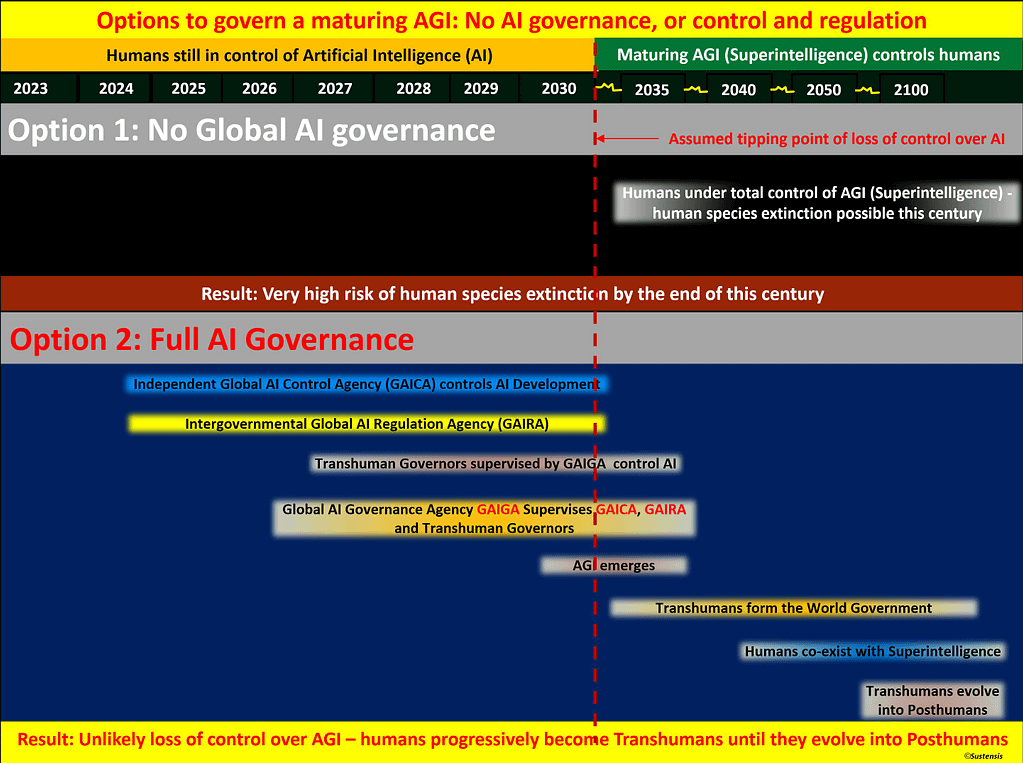

So, what are the options to control AI development? Just two. Option 1 is to have no control, and option 2 is to have a controlled development of AI.

But option 2 means not only having a process of control but also ensuring that the process is effective, which requires making very tough decisions and making them on time. This last condition is difficult to fulfil. Hence many people think it may already be too late to deliver a mature AI that is human-compatible, i.e., human-friendly. Whichever means of controlling AI we apply, they will vary depending on the agreed deadlines, available resources, organizational requirements, or legislative constraints. I have summarized these options in the next two sub-tabs.

Tony Czarnecki is the Managing Partner of Sustensis, a Think Tank on Civilisational Transition to Coexistence with Superintelligence. His latest book: ‘Prevail or Fail – a Civilisational Shift to the World of Transhumans’ is available on Amazon: https://www.amazon.co.uk/dp/B0C51RLW9B.
