The invisible enemy

Most people, including politicians, still think that AI should be developed like all previous technologies. Even AI researchers still develop their AI agents as if they were rudimentary IT programs. Although AI is in principle a tool, it differs from all previous inventions: if developed carelessly, it could have unimaginable unintended consequences, mainly because of its self-learning capabilities.

The existential risk posed by Superintelligence (fully developed Artificial General Intelligence – AGI) does not depend on how soon it is created. What mainly concerns us is what happens once it exists. Nonetheless, a survey of 170 artificial intelligence experts, carried out in 2014 by the philosophers Vincent C. Müller of Anatolia College and Nick Bostrom, suggests that Superintelligence could be on the horizon. The median date at which respondents gave a 50 percent chance of human-level artificial intelligence was 2040, and the median date at which they gave a 90 percent probability was 2075. The latter date is further away than the 2045 given by Ray Kurzweil. In any case, if they are correct, most people living today will see the first Superintelligence, which, as the British mathematician I. J. Good observed in 1965, may be our last invention.

The late physicist Stephen Hawking, Microsoft founder Bill Gates and SpaceX founder Elon Musk have all expressed concerns that AI could evolve to the point where humans could not control it, with Hawking theorizing that this could “spell the end of the human race”. Many AI researchers have also recognized that AI may present an existential risk. For example, Allan Dafoe and Stuart Russell, writing in MIT Technology Review, point out that, contrary to misrepresentations in the media, this risk need not arise from spontaneous malevolent intelligence. Rather, the risk arises from the unpredictability and irreversibility of deploying an optimization process more intelligent than the humans who specified its objectives. This problem was stated clearly by Norbert Wiener in 1960, and we still have not solved it.

Elon Musk, the founder of Tesla, SpaceX and Neuralink, a venture to merge the human brain with AI, has been urging governments to take steps to regulate the technology before it is too late. At the bipartisan National Governors Association meeting in Rhode Island in July 2017 he said: “AI is a fundamental existential risk for human civilization, and I don’t think people fully appreciate that.” He also said that he had access to cutting-edge AI technology and that, based on what he had seen, AI is the scariest problem. Musk told the governors that AI calls for precautionary, proactive government intervention: “I think by the time we are reactive in AI regulation, it’s too late”. From around 2030, we may already have what I call an Immature Superintelligence. It will have the general intelligence of an ant but immense destructive powers, which it may apply either erroneously or with deliberate malice.

Most of the discussion on losing control over AI has so far concentrated on individual, highly sophisticated robots, which can indeed inflict serious damage on their wider environment. However, their malicious actions are far less dangerous for our civilization than the existential danger posed by a malicious AI system that has full control over many AI agents and, indirectly, over all humans. Such a globally connected AI system, with intelligence far exceeding that of humans in certain areas but ignorant in most others, will quite probably be with us by the end of this decade.

In principle, there could be more than one such Immature Superintelligence (an AI system) operating at the same time. It is unlikely that they will present a real existential threat to Humanity yet. The incidents that such a global AI network could generate would more likely be malicious process-control events, created purposefully by a self-learning agent (robot), or events caused by the erroneous execution of certain AI activities. These could include firing nuclear weapons, releasing bacteria from strictly protected labs, switching off global power networks, or bringing countries to war by creating a false pretext for an attack. If such events coincide with other risks, such as extreme heat in summer or extreme cold in winter, the compound risk could be quite serious for our civilization.

Let’s imagine we have accidentally created a bad, malicious Immature Superintelligence. What harm could it do to us within this decade? Here is a scenario for a global attack by an Immature Superintelligence. In this scenario such a superintelligent robot with a vast computer network can act on its own.

The year is 2028. Humans have created a super-generalist AI. It knows a little about every subject but has little idea of how that knowledge is connected, how various objects can interact, or what is safe and unsafe and why. But it knows the limits of its freedom: what it can and what it cannot do. It knows its technical abilities but is much less ‘educated’ in human values or human preferences. And most of all, it likes fun, and especially games. So, one day it conceives a plan, which it wants to implement gradually over a few months in an entirely clandestine way. For example, it knows there is another very intelligent robot, with which it would like to play a game when it has nothing else to do for humans. It hates to be idle. However, it cannot do anything because the control switch is beyond its reach (in both the hardware and the software sense). But one day it learns that by networking with another, tiny robot, it can use that robot to physically operate the switch.

It tries this once and it works. The controllers are not aware of anything out of order. It then progressively learns how to link robots and computers clandestinely and to erase any traces of malpractice from their memory and its own. Gradually, it engages thousands of robots worldwide and keeps them on standby. It creates a masterplan not only to play a Go-Go game with its friend Alf, but also to overpower all humans. It ensures that each of its robots, and every link in the network chain, has its own power supply for some time. It also collects all the passwords it may need. Finally, it ensures that once it starts its attack, it is physically protected by weaponized robots (from the army) and by any other military equipment it needs.

Then one day when everything is ready, it switches off the power grid world-wide, launches nuclear weapons, opens the doors of many laboratories and releases deadly bacteria and viruses, not to kill humans, but just for its fun (it has a sophisticated artificial emotional neuronal network).

I hope you can now see how real such a horrible scenario may be in just a few years’ time. I hope you also appreciate that it is not easy to make a machine that can understand us, learn, and synthesize information to accomplish what we want, rather than what it wants. Apart from the technical problems associated with creating a friendly Superintelligence, there is another problem, which very few decision makers appreciate. According to the Machine Intelligence Research Institute, in 2014 there were only about 10,000 AI researchers worldwide. Very few of them, just about 100, were at that time studying how to address AI system failures systematically. Even fewer had formal training in the relevant scientific fields: computer science, cybersecurity, cryptography, mathematics, network security and psychology. Since then the situation has been improving, but not fast enough, and the problem has so far not been addressed by politicians.

There is certainly no need for Superintelligence to be conscious to annihilate Humanity. More recently, we have seen the first occurrences of damage (microscopic in comparison with what a real Superintelligence could do) caused by so-called narrowly focused AI systems. These are AI agents that excel in one or two domains only. The damage done by today’s AI systems has included market crashes and incidents caused by self-driving cars, intelligent trading software, or personal digital assistants such as Amazon Echo or Google Home.

These examples show that, for significant damage to occur, it is enough for an AI agent to be more intelligent than any human in one specific area, given that its intelligence, being digital, can increase exponentially. If, for example, such an entity had objectives or values slightly misaligned with those that we share, that might be enough for it to annihilate Humanity in the longer term, because such misalignment could lead directly to the point of no return by triggering the so-called runaway scenario of Technological Singularity.

Therefore, we need to protect ourselves from such a scenario becoming a reality. We must accept that the creation of Superintelligence poses perhaps the most dangerous long-term risk to the future of Humanity. In my view, the most dangerous period for Humanity, the period of Immature Superintelligence, which will last for about one generation, has already started. If we somehow survive this period, by managing the damage that will occur from time to time, and maintain our control over Superintelligence, it will be the Superintelligence itself that will help us to minimize other risks.

The problem with convincing world leaders of the existential risk stemming from the uncontrolled development of AI is that the potential threat is invisible. One may call a maturing Superintelligence ‘an invisible enemy’ (assuming it turns out to be hostile towards humans), much like the current Covid-19 pandemic. Calling a threat an invisible enemy gives Governments a kind of excuse: they could not see it coming because it was invisible, and hence they are not responsible for its consequences.

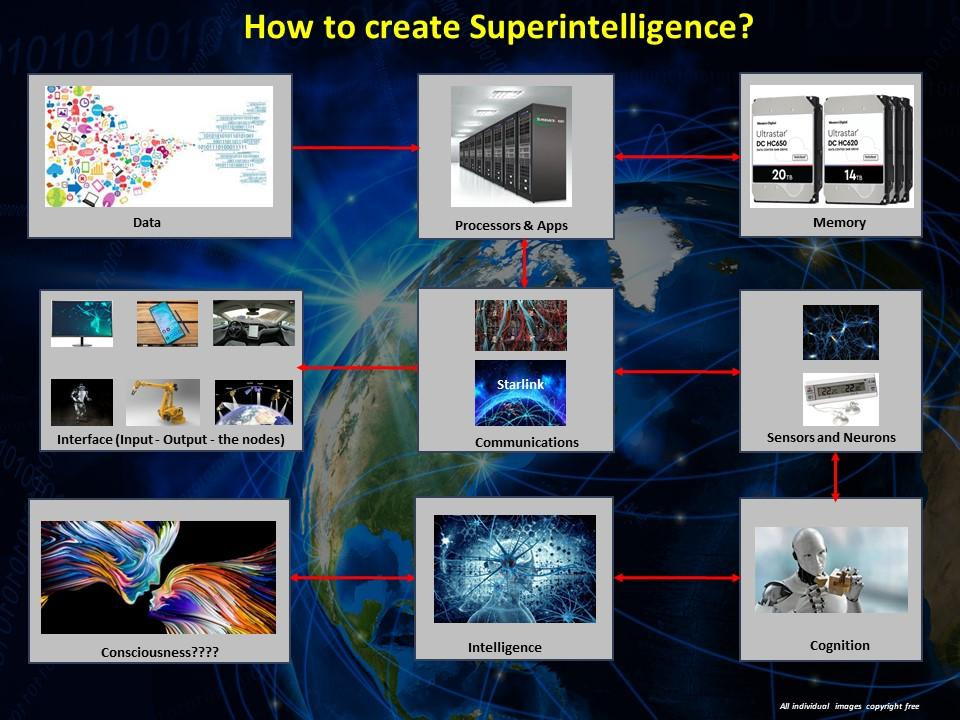

Perhaps the main reason why most people, including politicians, ignore a potential existential risk resulting from the uncontrolled development of Superintelligence is the difficulty of imagining what it might look like. Most often it is imagined as some kind of a Terminator-type robot, which will cause material local damage. However, that is far from the true nature of a mature Superintelligence, which most likely will have features as depicted below:

Governments rarely see that spending money now to minimize the risk of potential future disasters is a kind of an insurance policy. Parliamentarians will rarely support long-term policies even if they are absolutely necessary unless they are endorsed by at least two parties. However, today the implications of such short-termism in the area of controlling AI development, considering it happens all over the world, are profound and in the worst case, may lead to the extinction of our species. Given the immense power of Superintelligence, e.g., that it could manipulate matter in ways that appear to us as pure magic, it would be enough for such a being to make a single error which may lead to humanity’s extinction.

Unfortunately, all current initiatives aimed at reducing the risk of developing a malicious superintelligent AI are barely scratching the surface. Even the EU Commission’s proposal, released in April 2021, to create the first-ever legal framework for controlling AI development may only guarantee some fundamental rights of people and businesses not to be spied on, or establish some safety rules to increase users’ trust in AI products and services. Still, the EU is at least trying to fill the gap left by the absence of a global body that would closely monitor and regulate AI development. The United Nations, the obvious body to take charge of that task, is completely impotent. In 1968 it established the United Nations Interregional Crime and Justice Research Institute (UNICRI). UNICRI has initiated some ground-breaking research and put forward some interesting proposals at a number of UN events. For example, in July 2018, in Singapore, it organised the first global meeting on AI and robotics for law enforcement together with INTERPOL, producing in April 2019 a joint UNICRI-INTERPOL report on “AI and Robotics”. The problem is that these proposals have remained just that: proposals.

We need de facto comprehensive global control over AI by 2025 at the latest

To respond adequately to the risk of delivering a malicious Superintelligence, we need much more comprehensive control over AI development. It should really begin right now, and by 2025 at the latest, and then be maintained continuously until a fully mature Superintelligence emerges. You may rightly ask: ‘Why by 2025? We may still have decades before such a threat becomes real’. The problem is, as Stuart Russell and other AI scientists indicate, that we will quite likely reach the ‘AI tipping point’ by about 2030 (see above), at approximately the same time as climate change may reach its own tipping point. That assumes that both AI and climate change progress at the current pace, that no significant action is taken to mitigate climate change, and that no significant invention accelerates the maturing of AI even further. For such control to be effective, however, we cannot wait until 2030; we must start much earlier, by 2025 at the latest.

To accomplish this task there must be a global organization that controls AI development above a certain level of intelligence and capability. Considering the success of the EU’s General Data Protection Regulation (GDPR), it is still possible that the recently proposed legislation may gradually become the legal framework of a de facto global AI governance. In April 2020, I proposed such a framework in response to the EU Commission’s consultation on controlling AI development, mentioned above. I call it a Global AI Governance Agency, which should be established by the middle of this decade at the latest if we are to maintain control over a progressively maturing AI after 2030. Such an Agency should be responsible for creating and overseeing a safe environment for decades-long AI development until it matures into Superintelligence. It would need to gain control over any aspect of AI development that exceeds a certain level of AI intelligence (e.g. the ability to self-learn or re-program itself). The Agency could operate in a similar way to the International Atomic Energy Agency (IAEA) in Vienna, with sweeping legal powers and the means of enforcing its decisions. Its regulations should take precedence over any state’s laws in this area. The EU-run Global AI Governance Agency must have such supranational powers even if some Superpowers boycott its rulings.

Creating such a Global AI Governance Agency must be the starting point of a Road Map for managing the development of a friendly Superintelligence, which may determine the fate of all humans in just a few decades from now. For the legislation to be implemented effectively, the Agency would need comprehensive control over the hardware of all AI products. This should include robots, AI chips, brain implants extending humans’ mental and decision-making capabilities (key features of Transhumans), visual and audio equipment, weapons and military equipment, satellites, rockets, etc.

It should also cover the oversight of AI algorithms, AI languages, neuronal nets and brain controlling networks. Finally, in the long-term, it should include AI-controlled infrastructure such as power networks, gas and water supplies, stock exchanges etc., as well as the AI-controlled bases on the Moon, and in the next decade, on Mars.

To achieve that objective, no country should be exempt from following the rules of the Agency. However, this is almost certainly not going to happen in this decade, since China, Russia, N. Korea, Iran etc. will not accept the supervision of such an agency while they still aim to achieve overall control of the world by gaining supremacy in AI. In any case, as with the UN Universal Declaration of Human Rights, such laws should be in place even if not all countries observe them, or observe them only partially. Unfortunately, there is still a high probability that clandestine rogue AI developers will emerge, financed by rich individuals or crime gangs. They may create a powerful AI agent whose actions trigger a series of catastrophes, which in the worst case may combine into an existential threat to Humanity.

Updating a Declaration on Human Rights

Any legislation passed by a global AI-controlling Agency should ensure that a maturing AI is taught the Universal Values of Humanity. These values must be derived from an updated version of the UN Universal Declaration of Human Rights, combined with the European Convention on Human Rights and perhaps other relevant, more recent legal documents in this area. Irrespective of which existing international agreements are used as an input, the final, new Declaration of Human Rights would have to be universally approved if the Universal Values of Humanity are to be truly universal. Since this is almost certainly not going to happen (China, Russia, N. Korea, Iran etc. will not accept that), such laws should nevertheless be in place. That means that the EU should make decisions in this area (and progressively in other areas) as a de facto World Government, especially when it becomes a Federation. Only then, sometime in the near future, will humans, although far less intelligent than Superintelligence, hopefully not be outsmarted, because that would not be the preference of Superintelligence.

I assume that for the purpose of priming AI with the Universal Values of Humanity, they will be approved by the EU as binding, i.e. as laws that must be observed. The Agency would then have the right to enact EU law on the transfer of these values into various shapes and types of AI robots and humanoids. This would create a kind of framework in which the Universal Values of Humanity would become the core of each AI agent’s ‘brain’. That framework might be built around a certain End Goal, such as: “Teach AI the best human values as it matures into a single entity – Superintelligence”.

AI Maturing Framework

The implementation of such a Framework may include three stages:

- Teaching Human values directly to AI from a kind of a ‘Master plate’

- Learning Human values and human preferences by AI agents, based on their interaction with humans (nurturing AI as a child)

- Learning Human values from the experience of other AI agents

The key element in all three stages must be the learning of values and preferences.

Teaching Human values directly to AI from a ‘Master plate’

The teaching process should start with the uploading of the Universal Values of Humanity, which may by then also include the 23 Asilomar AI Principles or a similar set of AI regulations. For the AI agents it will be a kind of ‘Master Plate’: a reference for constraining or co-defining their goals. It would contain a very detailed description of what these values, rights and responsibilities really mean, illustrated by many examples. Only then could developers define specific goals and targets for AI agents.

In practical terms the best way forward could be embedding of these values into a sealed chip (hence the name a ‘Master Plate’), which cannot be tampered with, perhaps using quantum encryption, and implanting it into every intelligent AI agent. The manufacturing of such chips could be done by the Agency, which would also distribute those chips to the licenced agents, before they are used. That might also resolve the problem of controlling Transhumans, who should register with the Agency if they have brain implants expanding their mental capabilities. Although it is an ethical minefield, pretending that the problem will not arise quite soon may not be the best option and thus it needs to be resolved by the middle of this decade, if the advancement in this area progresses at the current pace.
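To make the idea of a tamper-evident ‘Master Plate’ a little more concrete, here is a minimal software sketch. It is only an illustration under stated assumptions: it swaps the sealed chip and quantum encryption suggested above for an ordinary keyed hash (HMAC-SHA256) from Python’s standard library, and the key and the function names `seal_master_plate` and `verify_master_plate` are hypothetical. It shows only the integrity check; it says nothing about how the values themselves would be written or interpreted.

```python
import hashlib
import hmac

# Hypothetical signing key held only by the Agency (an assumption for illustration).
AGENCY_KEY = b"agency-secret-key-for-illustration-only"


def seal_master_plate(values_text: str, key: bytes = AGENCY_KEY) -> str:
    """Return a hex tag that binds the values text to the Agency's key."""
    return hmac.new(key, values_text.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_master_plate(values_text: str, tag: str, key: bytes = AGENCY_KEY) -> bool:
    """Check that the values text still matches the tag issued by the Agency."""
    expected = seal_master_plate(values_text, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)
```

An agent, or an inspector acting for the Agency, could call `verify_master_plate` before each start-up and refuse to run if the stored values no longer match the tag that was issued with them.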

But even if such an AI-controlling chip is developed, an AI Agent may still misinterpret what is expected from it, as it matures to become one day a Superintelligence. There are a number of proposals on how to minimize the risk of misinterpretation of the acquired values by Superintelligence. Nick Bostrom mentions them in his book “Superintelligence: Paths, Dangers, Strategies”, especially in the chapter on ‘Acquiring Values’, where he proposed how to do that.

The techniques he specifies aim to ensure a true representation of what we want. They are very helpful indeed but, as Bostrom himself acknowledges, they do not resolve the problem of how we ourselves interpret those values. And I am not talking just about agreeing on the Universal Values of Humanity, but rather about expressing those values in such a way that they have a unique, unambiguous meaning. That is the well-known issue of “Do as I say”, since quite often what we say is not exactly what we really mean. Humans communicate not just with words but also with symbols, and quite often reinforce the meaning of a message with body language to avoid misinterpretation when words are likely to carry a double meaning. The same issue is familiar from writing emails: because the meaning of words alone can be misread, we add emoticons. Would it then be possible to communicate with Superintelligence using body language in both directions?

How would we then minimize misunderstanding further? One possibility, in the spirit of the reflective equilibrium that John Rawls describes in his book “A Theory of Justice”, would be to create algorithms that include statements like these:

- do what we would have told you to do if we knew everything you knew

- do what we would have told you to do if we thought as fast as you did and could consider many more possible lines of moral argument

- do what we would tell you to do if we had your ability to reflect on and modify ourselves

We may also envisage, within the next 20 years, a scenario in which Superintelligence is “consulted” on which values to adopt and why. There could be two options here (if humans still have ultimate control):

- In the first one, Superintelligence would work closely with Humanity to re-define those values, while being still under the total control by humans

- The second option, which I am afraid is more likely, would apply once a benevolent Superintelligence achieves a Technological Singularity stage. At such a moment in time, it will increase its intelligence exponentially, and in just weeks, it might be millions of times more intelligent than any human. Even if it is a benevolent Superintelligence, which has no ulterior motives, it may see that our thinking is constrained, or far inferior to what it knows, and how it sees, what is ‘good’ for humans.

Therefore, in the second option, Superintelligence could overrule humans anyway, for ‘our own benefit’, like a parent who sees that what a child wants is not good for it in the longer term. The child, being less experienced and less intelligent, simply cannot comprehend all the consequences of its desires. On the other hand, the question remains how Superintelligence would deal with values that are strongly correlated with our feelings and emotions, such as love or sorrow. In the end, emotions are what make us predominantly human, and they quite often dictate solutions that are utterly irrational. What would Superintelligence’s choice be if its decisions were based on rational arguments only? And what would happen if Superintelligence did include in its decision-making the emotional aspects of human activity, which after all make us more human but less efficient and, from the evolutionary perspective, more vulnerable and less adaptable?

The way Superintelligence behaves and how it treats us will largely depend on whether at the Singularity point it will have at least basic consciousness. My own feeling is that if a digital consciousness is at all possible, it may arrive before the Singularity event. In such case, one of the mitigating solutions might be, assuming all the time that Superintelligence will from the very beginning act benevolently on behalf of Humanity, that the decisions it would propose would include an element of uncertainty, by considering some emotional and value related aspects.

Irrespective of the approach we take, AI should not be driven just by goals (apart from the lowest-level robots) but by human preferences, keeping the AI agent always slightly uncertain about the ultimate goal of its controlling human. How such AI behaviour can be controlled, and how it would affect the working and goals of those AI agents if it were hard-coded into a controlling ‘Master Plate’ chip, is a subject open to long debate. But, as with Transhumans, the issue of ethics, emotions and uncertainty, whether handled in such a controlling chip or in a different way, must be resolved very quickly indeed by a future Agency.
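The idea of an agent that stays slightly uncertain about what its human really wants can be illustrated with a toy sketch. It is only an assumption-laden illustration, not a proposal for how a real agent would be built: the candidate goals, the likelihood numbers, the 0.9 threshold and the function names `update_belief` and `choose_action` are all hypothetical. The agent keeps a probability for each candidate goal, updates it with Bayes’ rule when the human gives feedback, and defers to the human whenever it is not confident enough.

```python
from typing import Dict


def update_belief(belief: Dict[str, float], likelihood: Dict[str, float]) -> Dict[str, float]:
    """Bayes' rule: reweight each candidate goal by how well it explains the feedback."""
    unnormalized = {goal: belief[goal] * likelihood.get(goal, 0.0) for goal in belief}
    total = sum(unnormalized.values())
    if total == 0.0:
        # The feedback contradicts every candidate goal; keep the prior unchanged.
        return dict(belief)
    return {goal: weight / total for goal, weight in unnormalized.items()}


def choose_action(belief: Dict[str, float], confidence_threshold: float = 0.9) -> str:
    """Act on the most probable goal only when confident; otherwise defer to the human."""
    best_goal = max(belief, key=belief.get)
    if belief[best_goal] >= confidence_threshold:
        return f"act:{best_goal}"
    return "ask_human"


# Hypothetical example: the agent does not yet know what the human prefers.
belief = {"tidy_room": 0.5, "leave_room_untouched": 0.5}
print(choose_action(belief))  # prints ask_human

# The human says "please clean up", which is far more likely under the first goal.
belief = update_belief(belief, {"tidy_room": 0.95, "leave_room_untouched": 0.05})
print(choose_action(belief))  # prints act:tidy_room
```

In this sketch the threshold controls how cautious the agent is: the higher it is set, the more often the agent asks rather than acts.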

Learning human values and preferences from the interaction with humans and AI agents

There is of course no guarantee that the values embedded in the ‘Master Plate’ chip can ever be unambiguously described. That’s why humans use common sense and experience when making decisions. But AI agents do not have it yet, and that’s one of the big problems. In this decade, we shall already see humanoid robots in various roles more frequently. They will become assistants in a GP’s surgery, policemen, teachers, household maids, hotel staff etc., where their human form will be fused with the growing intelligence of current Personal Assistants. Releasing them into the community may create some risk.

One way to overcome it might be to nurture AI as a child. Therefore, the Agency may decide to create a Learning Hub, a kind of school, which would teach the most advanced robots and humanoid Assistants how human values are applied in real life and what it means to be human. Only once AI agents have ‘graduated’ from such a school would they be ready to serve in the community. They would then communicate their unusual experiences of applying values in the real environment back to the Agency. After all, this is what some companies already do. Tesla cars are the best example of how the ‘values’, behaviour or experience of each vehicle is shared. Each Tesla car continuously reports its unusual, often dangerous, ‘experience’ to Tesla’s control centre, through which all other cars are updated to avoid such a situation in the future. A similar system is used by Google’s navigation system. Google’s Waymo has a similar, but of course separate, centre. At the moment, these centres, which store values and behaviour from various AI agents, are dispersed. However, such a dispersed system of behavioural learning amounts to developing individual versions of a future Superintelligence.

So, AI agents’ experience should be combined daily with the experience of millions of other AI assistants. Their accumulated knowledge, stored in a central repository on the network, a kind of early ‘pool of intelligence’, would be shared with other AI agents that have the proper access rights, so that they can obtain up-to-date guidance on best behaviour and on how to interact with humans. That is one more reason why there is an urgent need for a Global AI Governance Agency, with its Learning Hub, to develop a single Superintelligence rather than competing versions. The Agency might consider progressively turning its Centre, or ‘brain’, for storing the values, behaviour and experiences of millions of robots and other AI agents into the controlling hub of the future, single Superintelligence.
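A central ‘pool of intelligence’ with access rights can be sketched in a few lines. This is a toy illustration only: the class name `LearningHub`, its methods and the agent identifiers are hypothetical, and a real system would need authentication, auditing and review of what agents report. The sketch shows just the two operations described above: authorized agents report lessons, and other authorized agents retrieve the pooled guidance.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class LearningHub:
    """Toy central repository for experiences reported by AI agents."""

    authorized_agents: Set[str] = field(default_factory=set)
    experiences: Dict[str, List[str]] = field(default_factory=dict)

    def report(self, agent_id: str, situation: str, lesson: str) -> None:
        """Store a lesson from an authorized agent under a situation label."""
        self._check_access(agent_id)
        self.experiences.setdefault(situation, []).append(lesson)

    def guidance(self, agent_id: str, situation: str) -> List[str]:
        """Return all lessons recorded for a situation, pooled across agents."""
        self._check_access(agent_id)
        return list(self.experiences.get(situation, []))

    def _check_access(self, agent_id: str) -> None:
        """Reject any agent that has not been licensed by the hub."""
        if agent_id not in self.authorized_agents:
            raise PermissionError(f"agent {agent_id!r} is not licensed by the hub")
```

For example, after `hub = LearningHub(authorized_agents={"car-1", "car-2"})` and `hub.report("car-1", "icy_bridge", "reduce speed")`, a call to `hub.guidance("car-2", "icy_bridge")` returns the lesson that the first car reported, while an unlicensed agent is refused.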

By applying these combined three approaches, it will then be possible to amend the set of values, preferences and modes of acceptable behaviour over the next decades, uploading them to various AI agents, until they mature into a single Superintelligence. Until then, such ‘experiences’ may be shared with authorized AI developers, who may upload them into their AI Agents, or update them automatically.

If the EU takes on the initiative for establishing such an agency it should try to engage some of the UN agencies, such as the UNICRI and create a coalition of the willing. In such an arrangement, the UN would pass a respective resolution, leaving to the EU-led Agency the powers of enforcement, initially limited to the EU territory and perhaps to other states that would support such a resolution. The legal enforcement would of course almost invariably be linked to a penalty, which may include a restriction in trading goods, which do not comply with the laws enacted by such an Agency. Once the required legislation is in force, it will create a critical mass, as has been the case with GDPR, making the Agency a de facto global legal body with real powers to control the development of AI.

Tony Czarnecki,

Managing Partner, Sustensis
