The assumption I made earlier regarding control of the maturing process of AI was that there will be a Global AI Governance Agency, such as the European Artificial Intelligence Board (EAIB), which would keep tight control over ever more intelligent AI. I mentioned there that at least two conditions must be met for such an approach to succeed: the control must be global, and the values that we pass on to Superintelligence must be Universal Human Values, i.e., accepted by almost every country in the world. It is here that we may have a big problem, since such an Agency must be in place from about 2025, and by 2030 at the latest. Achieving those objectives would require that, by the middle of this decade, we have one large democratic organization, such as the European Federation, based on new principles of democracy. Furthermore, such an organization would have to agree to act on behalf of the whole of Humanity. Most people, me included, doubt whether this is possible at all. Therefore, whilst we should strive to achieve such a goal, we have to prepare an alternative.

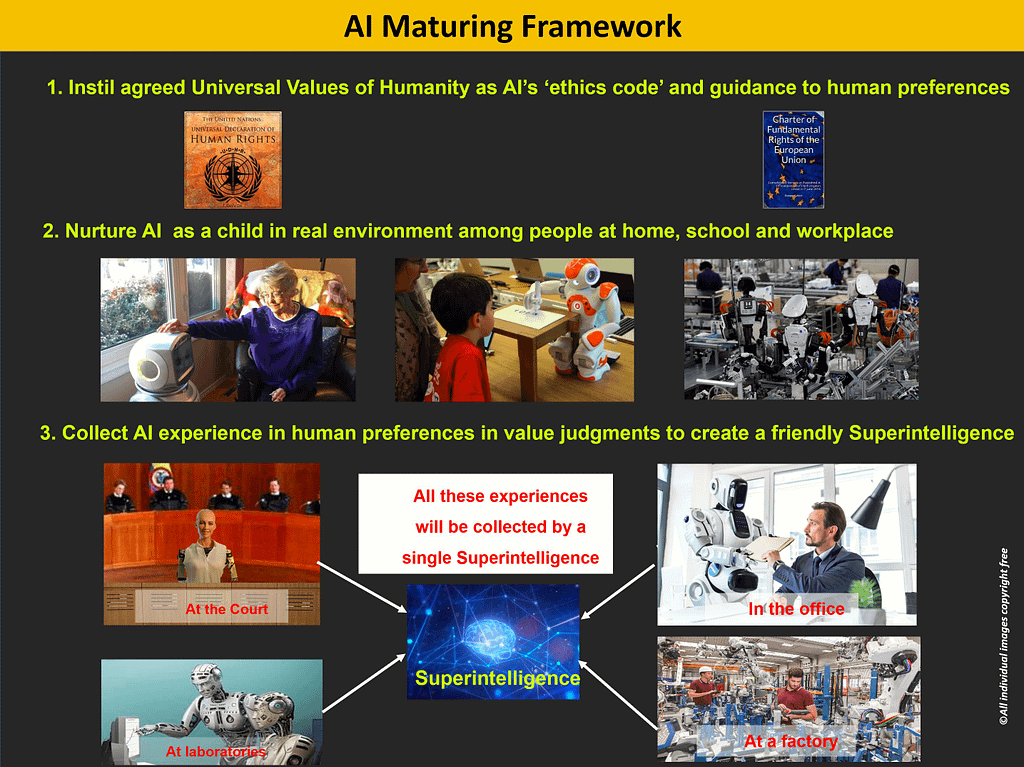

I assume that, for the purpose of priming AI with the Universal Values of Humanity, these values will be approved by the EU as binding. If the EAIB Agency does indeed start to operate in a few years’ time, it would then have the right to enact EU law on the transfer of these values into various shapes and types of AI robots and humanoids. This would create a framework in which the Universal Values of Humanity become the core of each AI agent’s ‘brain’. That framework might be built with a certain End Goal, such as:

Teach AI the preferred human values until it matures into a single entity – Superintelligence.

Note that I have used the word ‘preferred’ rather than ‘best’, since this would always leave a margin of uncertainty in the Superintelligence’s actions. This, as Stuart Russell suggests in his book ‘Human Compatible’, might significantly reduce the risk of any AI agent making wrong decisions. The implementation of that Framework may include three stages:

- Teaching Human values to AI directly from a ‘Master plate’, which would be hard-coded into any chip of AI robots, tools or other AI infrastructure, like power network controllers

- Nurture AI as a child: AI agents learn human values and preferences through their interaction with humans

- Learning Human values by AI Agents from the experience of their ‘peers’

The key element in all three stages must be the learning of values and preferences. This could be done by creating a kind of Maturing Framework, with the three components described below.

1. Teaching Human values to AI directly from a ‘Master plate’

In practical terms, the best way forward could be to embed these values into a sealed chip (hence the name ‘Master Plate’) that cannot be tampered with, perhaps protected by quantum encryption, and to implant it into every intelligent AI agent. Such chips could be manufactured by the controlling agency, such as the proposed EAIB Agency, which would also distribute them to licensed agents before they are put to use. That might also resolve the imminent problem of controlling Transhumans, who should first be registered with such an Agency, but only if they have brain implants that expand their mental capabilities. Although this is an ethical minefield, pretending that the problem will not arise quite soon may not be the best option; it needs to be resolved by the middle of this decade if advancement in this area continues at the current pace.
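The tamper-evidence idea behind a sealed ‘Master Plate’ can be illustrated with a minimal sketch. It does not use quantum encryption; it swaps in an ordinary keyed digest (HMAC-SHA256) from Python's standard library simply to show how a change to the embedded values could be detected. The value set, the key, and the function names `seal` and `is_untampered` are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical value set; the actual Universal Values of Humanity are not specified here.
MASTER_VALUES = {"version": 1, "values": ["do no harm", "respect human autonomy"]}


def seal(values: dict, agency_key: bytes) -> str:
    """Return a keyed digest (HMAC-SHA256) over a canonical JSON encoding of the values."""
    payload = json.dumps(values, sort_keys=True).encode("utf-8")
    return hmac.new(agency_key, payload, hashlib.sha256).hexdigest()


def is_untampered(values: dict, seal_hex: str, agency_key: bytes) -> bool:
    """Check that the values still match the seal issued by the agency."""
    return hmac.compare_digest(seal(values, agency_key), seal_hex)


# Assumption: a secret held only by the issuing agency.
agency_key = b"demo-key-held-by-the-agency"
issued_seal = seal(MASTER_VALUES, agency_key)

# Someone removes a value after the seal was issued.
tampered = {"version": 1, "values": ["do no harm"]}

print(is_untampered(MASTER_VALUES, issued_seal, agency_key))  # True
print(is_untampered(tampered, issued_seal, agency_key))       # False
```

In this sketch the checker needs the same secret key as the issuer. A deployed design would more plausibly rely on public-key signatures and tamper-resistant hardware, which are outside the scope of this illustration.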

There is, of course, no guarantee that the values embedded into the ‘Master Plate’ chip can ever be unambiguously described. Humans cope with such ambiguity by using common sense and experience when making decisions. AI agents do not have these yet, and that is one of the big problems. In this decade, we shall see humanoid robots in various roles more frequently. They will become assistants in GP surgeries, policemen, teachers, household maids, hotel staff, etc., where their human form will be fused with the growing intelligence of current Personal Assistants. Releasing them into the community may create some risk.

2. Nurture AI as a child

One way to reduce that risk might be to nurture AI as a child. The Agency, such as the EAIB, may therefore decide to create a Learning Hub, a kind of school, which would teach the most advanced robots and humanoid Assistants how human values are applied in real life and what it means to be human. In such a school, the robots will interact with people in various areas of human activity, such as school, factory, office, cinema, shop, museum, etc. They will then report their unusual experiences of applying values in the real environment back to the Agency, where they will be combined with the experience of millions of other AI assistants. Only once AI agents have ‘graduated’ from such a school would they be ready to serve in the community. Additionally, their accumulated knowledge, stored in a central repository on the network, a kind of early ‘pool of intelligence’, will have a gateway through which each of these AI agents, with proper access rights, will be able to update itself, or be updated, to gain up-to-date guidance on proper behaviour and on how to react to humans’ requests.

3. Learning Human values by AI Agents from the experience of their ‘peers’

Finally, AI agents will learn human values, and especially preferences in choices and behaviour, by directly sharing their own experience with other AI agents. After all, this is what some companies already do. Tesla cars are a good example of how the ‘values’, behaviour, or experience of each vehicle is shared. Each Tesla car continuously reports its unusual, often dangerous, ‘experience’ to Tesla’s control centre, through which all other cars are updated to avoid such a situation in the future. Google’s Waymo has a similar, but of course separate, centre. You use such a system yourself when driving with the help of Google’s navigation system. The cars in front of you inform the Centre of the traffic situation on a given road and, within seconds, it is relayed to the cars further down that road. Right now, the Centres that store values and behaviour from various AI agents are dispersed, each one supporting an individual AI company’s ‘micro’ Superintelligence. For the purpose of maturing Superintelligence, there should be just one such global centre.
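The report-and-relay pattern described above can be sketched in a few lines. This is only an illustration under stated assumptions: the class names `ExperienceHub` and `Agent`, their methods, and the example lesson are hypothetical, and they do not describe how any real vehicle or navigation service works internally.

```python
from dataclasses import dataclass, field


@dataclass
class ExperienceHub:
    """Hypothetical central store of lessons reported by AI agents."""
    lessons: list = field(default_factory=list)

    def report(self, agent_id: str, situation: str, guidance: str) -> int:
        """Record a lesson and return the number of lessons stored so far."""
        self.lessons.append(
            {"from": agent_id, "situation": situation, "guidance": guidance}
        )
        return len(self.lessons)

    def updates_since(self, last_seen: int) -> list:
        """Return the lessons added after the given position."""
        return self.lessons[last_seen:]


@dataclass
class Agent:
    """Hypothetical agent that keeps a local copy of shared guidance."""
    agent_id: str
    last_seen: int = 0
    guidance: dict = field(default_factory=dict)

    def sync(self, hub: ExperienceHub) -> None:
        """Pull every lesson this agent has not yet seen from the hub."""
        for lesson in hub.updates_since(self.last_seen):
            self.guidance[lesson["situation"]] = lesson["guidance"]
        self.last_seen = len(hub.lessons)


hub = ExperienceHub()
car_a = Agent("car-a")
car_b = Agent("car-b")

# One agent reports an unusual experience; another picks it up on its next sync.
hub.report(car_a.agent_id, "ice on bridge", "reduce speed")
car_b.sync(hub)
print(car_b.guidance)  # {'ice on bridge': 'reduce speed'}
```

With several such hubs, an agent would only ever learn from the peers that report to its own hub, which is the fragmentation the text argues against.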

That is one more reason why there is an urgent need for a Global AI Governance Agency, like the proposed EAIB, with its Learning Hub, to develop a single Superintelligence rather than competing versions of it. The Agency might consider progressively making its Centre, or its ‘brain’ for storing the values, behaviour and experiences of millions of robots and other AI agents, the controlling hub of the future, single Superintelligence.

The benefits of the AI Maturing Framework

By combining the three approaches, it may be possible to amend the set of values, preferences, and modes of acceptable behaviour over the next decades, by uploading them to various AI agents, until they mature into a single Superintelligence. But even if such an AI-controlling Master chip is developed, an AI agent may still misinterpret what is expected of it as it matures into a Superintelligence.

There are several proposals on how to minimize the risk of Superintelligence misinterpreting the values it acquires. Nick Bostrom discusses them in his book “Superintelligence: Paths, Dangers, Strategies”, especially in the chapter on ‘Acquiring Values’. The techniques he specifies aim to ensure a true representation of what we want. They are very helpful indeed but, as Bostrom himself acknowledges, they do not resolve the problem of how we ourselves interpret those values.

Therefore, we would need not only to agree on the Universal Values of Humanity, but also to express those values in such a way that they have a unique, unambiguous meaning. That is the well-known issue of “Do as I say”, since quite often what we say is not exactly what we mean. Humans communicate not just with words but also with symbols, and quite often reinforce the meaning of a message with body language, to avoid misinterpretation when a double meaning is likely. You may have come across this problem when writing emails: to avoid the misinterpretation that comes from relying on words alone, we use emoticons. Would it then be possible to communicate with Superintelligence using body language in both directions?

How would we then further minimize the misunderstanding of our preferences? One possibility, following John Rawls in his book “A Theory of Justice”, would be to create algorithms that include statements like these:

- do what we would have told you to do if we knew everything you knew

- do what we would have told you to do if we thought as fast as you did and could consider many more possible lines of moral argument

- do what we would tell you to do if we had your ability to reflect on and modify ourselves

In the next 20 years, we may also envisage a scenario in which Superintelligence is “consulted” on which values to adopt and why. An early form of this was demonstrated in 2019 by IBM’s Project Debater. Two options could apply here (if humans still have ultimate control):

- In the first one, Superintelligence would work closely with Humanity to redefine those values, while still being under total human control

- The second option, which I am afraid is more likely, would apply once a benevolent Superintelligence reaches the Technological Singularity stage. At that moment, it will increase its intelligence exponentially and, within just weeks, might be millions of times more intelligent than any human. Even a benevolent Superintelligence with no ulterior motives may see our thinking as constrained, or far inferior to what it knows and to how it sees what is ‘good’ for humans.

Therefore, under the second option, Superintelligence could overrule humans anyway, for ‘our own benefit’, like a parent who sees that what a child wants is not good for it in the longer term. The child, being less experienced and less intelligent, simply cannot comprehend all the consequences of its desires. On the other hand, the question remains how Superintelligence would deal with values that are strongly correlated with our feelings and emotions, such as love or sorrow. After all, emotions are what make us predominantly human, and they quite often dictate solutions that are utterly irrational. What would Superintelligence’s choice be if its decisions were based on rational arguments only? And what would happen if Superintelligence did include in its decision-making the emotional aspects of human activity, which, after all, make us more human but less efficient and, from the evolutionary perspective, more vulnerable and less adaptable?

The way Superintelligence behaves and how it treats us will largely depend on whether, at the Singularity point, it has at least basic consciousness. My own feeling is that if a digital consciousness is possible at all, it may arrive before the Singularity event. In such a case, and assuming throughout that Superintelligence acts benevolently on behalf of Humanity from the very beginning, one mitigating solution might be for the decisions it proposes to include an element of uncertainty, by considering some emotional and value-related aspects.
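The notion of an agent that stays uncertain about what a human wants, and defers to the human while that uncertainty is high, can be made concrete with a toy example. It is a minimal sketch using a plain Bayesian update, not Stuart Russell's formal model; the hypotheses, the likelihood numbers, and the 0.9 confidence threshold are all invented for illustration.

```python
def update_belief(belief: dict, likelihoods: dict) -> dict:
    """Bayes' rule: the posterior is proportional to prior times likelihood."""
    unnormalised = {h: belief[h] * likelihoods[h] for h in belief}
    total = sum(unnormalised.values())
    return {h: p / total for h, p in unnormalised.items()}


def choose(belief: dict, threshold: float = 0.9) -> str:
    """Act on the most probable preference only if confident; otherwise ask the human."""
    best, prob = max(belief.items(), key=lambda item: item[1])
    return f"act: {best}" if prob >= threshold else "ask human"


# The agent starts with no idea which drink the human prefers.
belief = {"wants tea": 0.5, "wants coffee": 0.5}
print(choose(belief))  # ask human

# Hypothetical observation: the human reaches for the coffee jar,
# which is far more likely if they want coffee than if they want tea.
belief = update_belief(belief, {"wants tea": 0.05, "wants coffee": 0.95})
print(choose(belief))  # act: wants coffee
```

Because the threshold is below 1, the agent never needs absolute certainty before acting, yet it keeps asking whenever the evidence is weak, which is the slight, permanent uncertainty about human goals that this approach relies on.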

Irrespective of the approach we take, AI should not be driven just by goals (apart from the lowest-level robots) but by human preferences, keeping the AI agent always slightly uncertain about the goal of a controlling human. How such AI behaviour can be controlled, and how it would affect the working and goals of those AI agents if it were hard-coded into a controlling ‘Master Plate’ chip, is a subject for a long debate. But, as with Transhumans, the issue of ethics, emotions, and uncertainty in such a controlling chip must be resolved very quickly. In this context, the proposed setting up of the European Artificial Intelligence Board is really welcome. However, by the time it starts operating, its legislation may already be obsolete because of new AI capabilities not envisaged today.