Taking Control Over AI before It Starts Controlling Us

London, 17/6/2023

(This is an extract from the book ‘Prevail or Fail – a Civilisational Shift to the World of Transhumans’ by Tony Czarnecki)

Whether AGI arrives by 2030 largely depends on the continued growth of computing power and on performance improvements in the related hardware and software. Based on recent progress in that area, my prediction that AGI will be more intelligent than humans by 2030 may still be rather too cautious. Here are some of the most significant developments over the last 15 years, which affect the whole AI sector, not just an individual product or service:

- 2006 – Convolutional Neural Nets, for image recognition (Fei-Fei Li)

- 2016 – AlphaGo – Supervised ML, Monte Carlo Tree Search + neural networks (DeepMind)

- 2017 – AlphaZero – self-play reinforcement learning (DeepMind)

- 2017 – Tokenized Self-Attention for NLP – Generative Pre-trained Transformers (Google Brain)

- 2021 – AlphaFold – Graph Transformers (graphs as tokens) predicting 3D protein folding (DeepMind)

- 2022 (March) – Artificial neurons based on photonic quantum memristors (University of Vienna)

- 2022 (2 April) – White Box – Self-explainable AI, Hybrid AI (French NukkAI lab)

- 2022 (4 April) – PaLM, Pathways Language Model, NLP with context and reasoning (Google Research)

- 2022 (11 May) – LaMDA – multi-modal AI agent – can also control robots with NLP (Google)

- 30 November 2022 – ChatGPT, the first publicly accessible AI Assistant, which almost overnight made the average person aware of what a ‘real’ AI, immensely more capable than Alexa, can do.

- 7 February 2023 – Microsoft’s Bing Chat and Google’s Bard are announced, linking for the first time Large Language Models (LLMs) such as ChatGPT to Internet search engines, such as Bing or Google.

- 1 March 2023 – Microsoft releases Kosmos-1 – the first multimodal “Universal Assistant” capable of operating in 15 modes.

- 7 March 2023 – Google’s PaLM-E is the first generalist robot using a multimodal embodied visual-language model (VLM), which can perform a variety of tasks without the need for retraining.

- 12 May 2023 – Anthropic releases its ChatGPT-like Assistant called Claude, which, however, is about 20 times more powerful, faster, less complex, and cheaper to operate.

If anybody had any doubt about how fast AI can advance, then 2022 is the best example. The number of fundamental discoveries and inventions in AI in 2022, quoted above, was the highest ever. But there are two events which will affect our daily life most, and which will increase the risk in the AI area even further and faster. The first one was the release of ChatGPT. It was truly a watershed moment. For the first time, the capabilities of the most advanced AI agent can be accessed by anyone, rather than only by the top AI specialists. The second pivotal moment came in February 2023, when Microsoft and Google released even more advanced AI Assistants, Bing Chat and Bard.

Fundamental improvements now happen in months, rather than in years. Kosmos-1 deserves a closer look, both for its potential impact and for the way such progress was achieved. Microsoft researchers introduced it just three months after the release of ChatGPT. It is a multimodal AI Assistant, which can analyse images for content, solve visual puzzles, perform visual text recognition, pass visual IQ tests, and understand natural language instructions. [43] The researchers believe that integrating different modes of input, such as text, audio, images, and video, is a key step towards building AGI, which can perform general tasks at the level of a human.

OpenAI, in tandem with Microsoft, seems to be leading that super-fast progress in AI capabilities, and they now do it with even greater ease. The need to write complex algorithms to achieve further improvement is becoming less frequent, because the most advanced companies are beginning to achieve stunning results just by combining existing standalone modules into more complex units. Artificial Narrow Intelligence (ANI) is advancing towards Artificial General Intelligence (AGI) by assembling existing pieces, like building a children’s castle from LEGO blocks. To build their most recent Universal AI Assistant, Microsoft combines ChatGPT with Visual Foundation Models, such as Visual Transformers or Stable Diffusion, so that the chatbot can understand and generate images and not just text. So far, they have combined 15 different modules and tools, which allow a user to interact with ChatGPT through images as well as text.

One of the primary goals of that research team has been to make ChatGPT more “humanlike” by making it easier to communicate with and more interactive. Additionally, the team has been trying to teach it to handle complex tasks which require multiple steps.

Even more interestingly, no extra training was carried out. All tasks were completed using prompts, i.e., text commands, which were either entered by the developers and fed into ChatGPT, or created by ChatGPT itself and fed into other models. The research team is also investigating the possibility of using ChatGPT to control other AI Assistants. That would create a “Universal Assistant”, which could handle a variety of tasks, including those that require natural language processing and image recognition within a multi-step process. [44]

We already have some insight into how AGI could work at a basic level, although of course this is not yet AGI. In April 2023, a GitHub user with licensed access to GPT-4, which is able to access the Internet in real time, significantly expanded its capabilities by creating Auto-GPT, an autonomous AI Assistant. A user provides the app with an objective and an initial task. Several agents within the program, including a task execution agent, a task creation agent, and a task prioritization agent, then complete tasks, send results, reprioritize, and create new tasks. The significance of this research lies in demonstrating the potential of AI-powered language models to perform tasks autonomously within various constraints and contexts. But it has also raised a new and complex issue, showing how such sophisticated LLMs can self-learn and produce unexpected results, which could be very beneficial but also malicious in the hands of a criminal user. This leads me to two conclusions.
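Before turning to those conclusions, the task loop described above can be illustrated with a minimal Python sketch. It is not Auto-GPT's actual code: the three agents are hypothetical stubs (`execute_task`, `create_tasks`, `prioritize`) standing in for what would be LLM prompts in a real system, and the `max_depth` and `max_steps` limits are assumptions added here so that the loop always terminates.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    depth: int = 0  # how many steps removed from the user's initial task


def execute_task(objective: str, task: Task) -> str:
    """Task execution agent (stub): a real system would prompt an LLM here."""
    return f"done: {task.name}"


def create_tasks(task: Task, result: str, max_depth: int) -> list[Task]:
    """Task creation agent (stub): proposes follow-up tasks from a result."""
    if task.depth >= max_depth:
        return []  # assumed limit so that the task list cannot grow forever
    return [
        Task(f"review {task.name}", task.depth + 1),
        Task(f"extend {task.name}", task.depth + 1),
    ]


def prioritize(tasks: list[Task]) -> list[Task]:
    """Task prioritization agent (stub): shallower tasks first, then by name."""
    return sorted(tasks, key=lambda t: (t.depth, t.name))


def run_agent_loop(objective: str, first_task: str,
                   max_depth: int = 1, max_steps: int = 10):
    """Execute, create, and reprioritize tasks until none remain."""
    queue = [Task(first_task)]
    log = []  # (task name, result) pairs in execution order
    steps = 0
    while queue and steps < max_steps:
        task = queue.pop(0)                      # take the top-priority task
        result = execute_task(objective, task)   # complete it
        log.append((task.name, result))          # send the result back
        queue.extend(create_tasks(task, result, max_depth))  # add new tasks
        queue = prioritize(queue)                # reprioritize what is left
        steps += 1
    return log


if __name__ == "__main__":
    for name, result in run_agent_loop("write report", "outline"):
        print(name, "->", result)
```

Even in this toy form, the loop decides for itself what to do next once it has been given an objective. The explicit step limit is the kind of constraint a human operator would have to impose from outside.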

The first one is that AGI will almost certainly emerge by 2030. This puts me in line with about 61% of the AI specialists who, in a poll conducted in March 2023 by Lex Fridman, an AI scientist at MIT, concluded that AGI would arrive within a decade.

The probability that AGI may indeed arrive by 2030 increases even further if we include usable quantum computers, which should be available in 2-3 years’ time, significantly increasing the processing power for some calculations. Therefore, we should consider 2030 as the AI’s tipping point when it may be outside of human control. Moreover, there may be several AGI systems by the end of this decade, which may even fight each other, if deployed by some psychopathic dictators, hoping to achieve AI Supremacy and use it to conquer the world.

The second conclusion I would draw is that the kind of AGI which emerges in several years’ time may not be delivered in the way we imagine. It will almost certainly not ‘think’ in the way we do, although there are indications that there will be many similarities. When we compare the outcomes of AGI’s ‘thinking’ and decision making, we will find that its intelligence, although working in different ways, is superior to that of most humans.

There is of course no scientific proof that we will lose control over AI by 2030. But neither is there any scientific proof that the global warming tipping point of a 1.5°C temperature increase will be reached by 2030 if we do not radically constrain CO2 emissions. Similarly, it is not so important who specifies a concrete date for the emergence of AGI, but that such a date is widely publicised and supported by eminent AI scientists. For example, it was argued for decades that a potential global warming tipping point was far away, so nothing was done. Only when a maximum 1.5°C temperature rise and a tipping point date of 2030 were set, at the Paris conference in 2015 and at COP26 in Glasgow in 2021, was concrete global action finally agreed.

If AGI does not emerge before 2030, it will give us more time to prepare for the transition to the period when it will start controlling us. The date 2030 is only an example, although, as with climate change, it seems to be the most likely one. There is a saying, ‘What is not measured is not done’, and just declaring such thresholds may be enough to trigger global action.

Why is it important to set 2030 as a date of loss of control over AGI?

Before going any further, I need to restate what I understand as AGI, i.e.,

Artificial General Intelligence is a self-learning intelligence capable of solving any task better than any human.

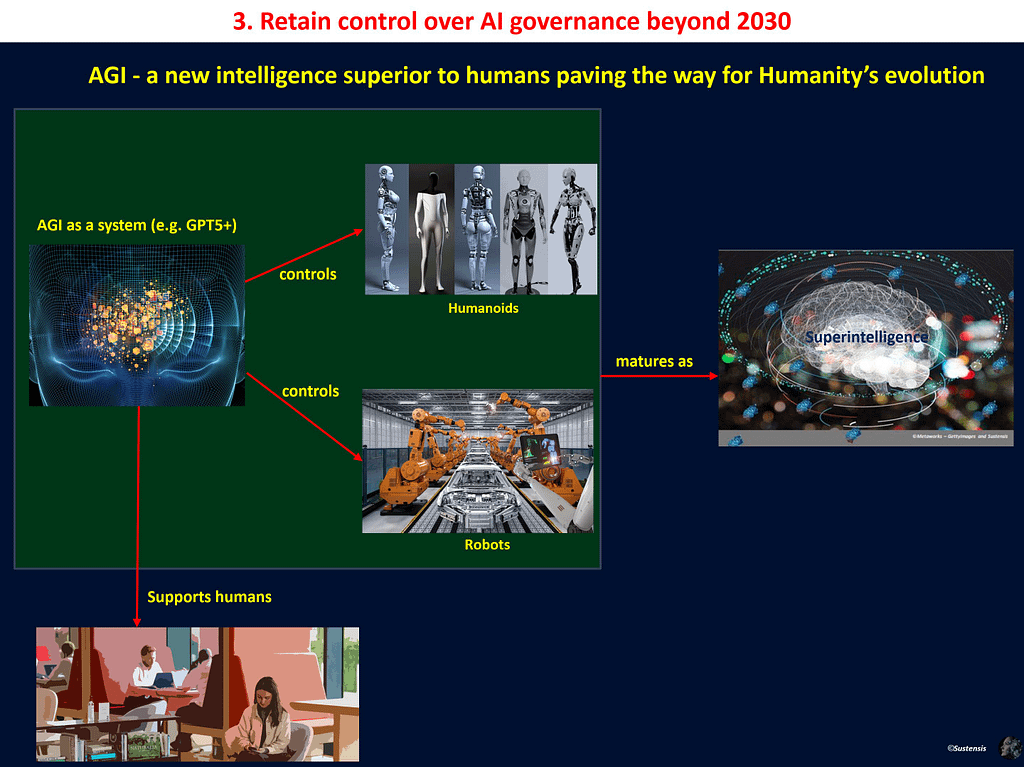

The intelligence of such a system will manifest itself in the humanoids or other devices which it will be controlling. For their intelligence to reach a human level, they will need at least these capabilities: short-term memory, long-term memory, the ability to execute multi-step instructions, their own goals and interests, emotions, and cognition. Furthermore, to be aligned with the best human values, such a system should be truthful and objective.

How many years away are we, then, from the moment when a Universal AI Assistant will have human-level intelligence? Paul Pallaghy, the proponent of Natural Language Understanding theory, who uses a definition similar to mine, is one of those AI researchers who predict that AGI will arrive in 2024 [2]. As I have mentioned earlier, I am closer to Ray Kurzweil’s prediction, and throughout this book I assume that AGI will emerge by 2030.

However, setting a concrete date for AGI’s emergence based on when it reaches human-level intelligence may not be the right approach. More important than a philosophical debate on the nature of intelligence is whether AGI will be able to outsmart us and get out of control by about 2030. I think AGI will not emerge at a specific moment in time. It will rather be a continuous process, as, for example, Sam Altman, the CEO of OpenAI, also argues [45]. Such a gradual loss of control will manifest itself in a subtle influence over our decisions, until AGI starts making decisions for us. A total loss of control over AGI will happen when we are unable to reverse such decisions.

That is why in my definition of AGI, its capabilities are more important than a specific definition of what a human level intelligence means. Since AGI with a human level intelligence will continue to increase its capabilities exponentially, we will quickly lose control over its behaviour and its own goals. That key capability of AGI being outside of human control may arrive by 2030 if we do not rapidly impose measures delaying that moment. That is why we should consider all feasible options to extend the ‘AI’s nursery time’ beyond 2030.

It is mostly assumed that such an AGI will only be embedded in a single humanoid robot. This may be the general practice. In reality, however, each humanoid will be an avatar of a self-learning network of thousands of globally connected AGI humanoids, controlling millions of other less intelligent robots and trillions of sensors. Such a network, which is highly likely to be outside of human control, might be an existential threat. Imagine that no country can control it, just as no country has been able to control the Internet on a global scale for over two decades. The Internet’s infrastructure and its domains, without which no Internet page could exist, have been coordinated, so far very successfully, by independent international bodies such as ICANN (domain names) and the W3C (web standards).

One way of comparing the intelligence of various species is to ask which achieves the same objective better than the others. In evolutionary terms, this means a better chance of a species’ survival. Achieving the same objectives better than the others requires various skills, and a perception of how effective those skills will be when they are needed. That is one aspect of awareness and cognition. If we take as a measure of intelligence the capability of one species to control another, then the species that remains in control of its own destiny, i.e., escapes control by the other species, is the more intelligent. Therefore, the moment when we are no longer able to control AGI will be the moment when its general intelligence is higher than ours, even if human intelligence still prevails in performing certain tasks.

Tony Czarnecki is the Managing Partner of Sustensis, a Think Tank on a Civilisational Transition to Coexistence with Superintelligence. His latest book: ‘Prevail or Fail – a Civilisational Shift to the World of Transhumans’ is available on Amazon: https://www.amazon.co.uk/dp/B0C51RLW9B.
