Taking the first steps in the evolution of the human species

London, 17/6/2023

(This is an extract from the book ‘Prevail or Fail – a Civilisational Shift to the World of Transhumans’ by Tony Czarnecki.)

By now you may already be convinced that AI is indeed an existential threat, far more severe than Climate Change and other man-made risks. AI is an existential threat of an entirely different magnitude, one that could make our species extinct through direct malevolent action. But it could also become our gateway to a world of unimaginable abundance and to our evolution into a new species: Posthumans. The first step in that evolution is a civilisational shift to the world of Transhumans. In that shift, Transhuman Governors will play a pivotal role: first, they will control AI development from within, as discussed in the previous chapter, and then they will help all of us make the transition less chaotic and dangerous. Transhuman Governors will thus play a dual role, controlling the maturing Superintelligence from within while also serving as a kind of guinea pig for testing whether a whole human mind can be uploaded into a digital structure. They would also pave the way for millions of humans, and in the next century billions, to take that huge evolutionary step and become Posthumans.

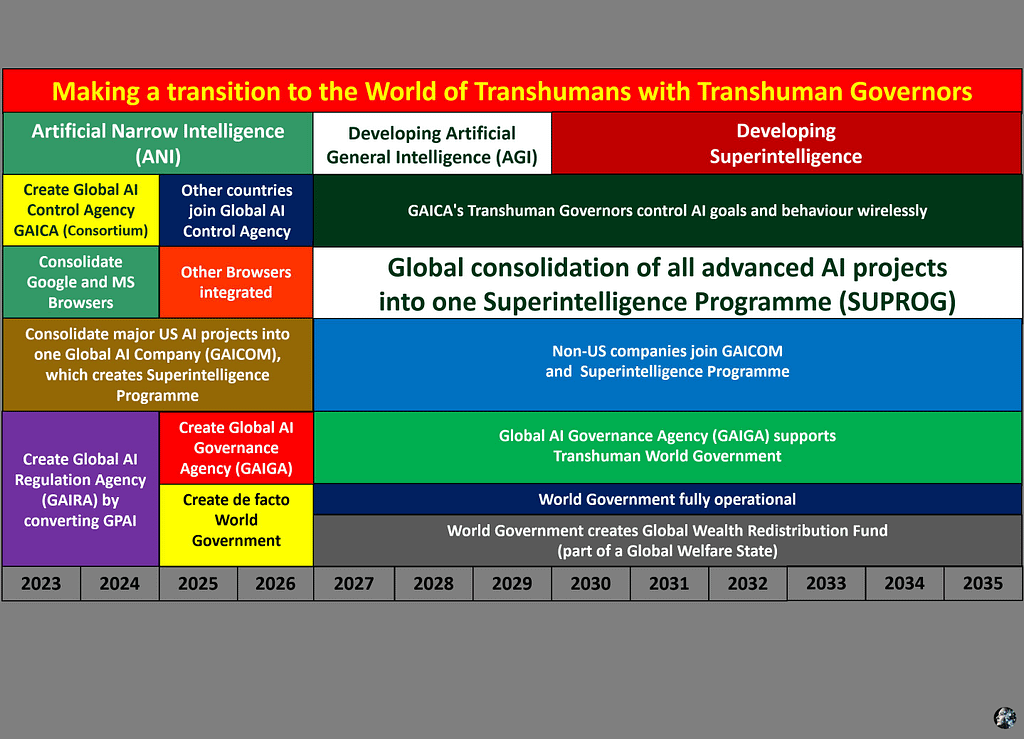

But Transhumans will be able to evolve alongside the maturing AGI, and later Superintelligence, only with global organizational, political and material support. I have produced a detailed Civilisational Transition Schedule in Chapter 4 of Part 1. The schedule below summarises the main steps that need to be taken over the next 10–15 years. All the organizations and institutions involved, such as GAICA, have been described earlier, so here I present them specifically in the context of the role Transhuman Governors will play in that transition.

I start with the current period of Artificial Narrow Intelligence (ANI). We have already, unknowingly, entered the period I call the “Transition to Coexistence with Superintelligence”. In practice, we have about a decade to put in place at least the main safeguards to control the Superintelligence’s capabilities, to protect us as a species, and to develop it as a friendly Superintelligence that will become our partner. One of the key preconditions for a successful transition is the creation of a powerful supranational organization acting on behalf of all of us as a planetary civilisation (considering that the UN cannot realistically play that role). We must accept that the world will probably not act as a single unified civilisation, at least not immediately. Since we must act now, the practical option is to rely on the most advanced international organization, which would initially act on behalf of the whole world, even though it would include only some countries. That is why I call it ‘a de facto World Government’.

All the institutions mentioned in this Plan have already been described. This chapter covers how decision-making, especially in politics, will be affected by the emergence of Transhuman Governors and, ultimately, by the Transhuman Government.

The transition to the next stage, the development of AGI, will be fuzzy, and we may only notice by chance that AGI is already here. Similarly, the transition to the final stage, Developing Superintelligence, will be gradual and carried out mostly by AGI itself.

We have entered the most uncertain period in the existence of humankind. You can judge for yourself whether this is an exaggeration or an understatement.

Tony Czarnecki is the Managing Partner of Sustensis, a Think Tank on Civilisational Transition to Coexistence with Superintelligence. His latest book, ‘Prevail or Fail – a Civilisational Shift to the World of Transhumans’, is available on Amazon: https://www.amazon.co.uk/dp/B0C51RLW9B.