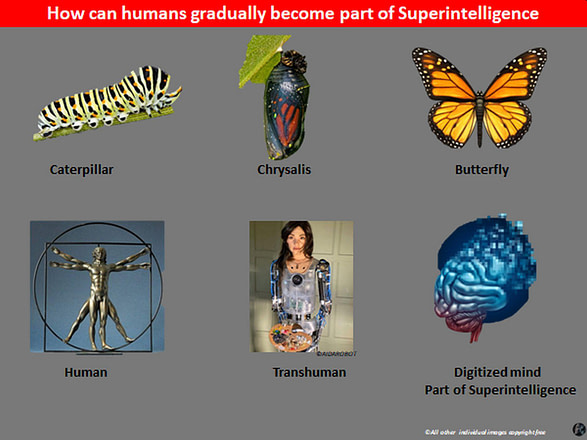

We are the only species that may be able to steer its own evolution in a desired direction. But we need to do it in stages. At present we are at the caterpillar stage. Our goal must be to guide our evolution to the next stage, the chrysalis, when some of us become Transhumans, and then to the butterfly stage, when most humans will morph into Superintelligence.

If we do nothing, our species may simply become extinct within this century or the next as a consequence of about a dozen existential risks. If we act together as a planetary civilization, we may minimize those risks. However, even if we manage to do that, the course of our evolution has already been set. We can only hope to evolve in a controlled way into a new, digitized species, ultimately morphing with Superintelligence. I summarize my case below.

- The world has started to change at a nearly exponential pace in most areas. What once took a decade can now be achieved in a year or two.

- Apart from other man-made (anthropogenic) existential dangers to Humanity, such as biotechnology or nuclear war, which could materialize at any time, the most imminent risk facing Humanity is Artificial Intelligence (AI).

- Technology is the driving force behind the exponential pace of change, in particular the very rapid development of AI’s capabilities. AI has already been delivering many benefits to us all, and in the next few decades it may create a world of unimaginable abundance. That is the side of AI we all want to hear about.

- However, AI, like many other technological breakthroughs such as nuclear energy or biotechnology (especially genetics), has also become a risk, probably the greatest existential risk that Humanity has ever faced.

- By 2030 we may already have an immature form of Artificial Intelligence that has not yet reached Artificial General Intelligence (AGI), also called Superintelligence, which is the term used on this website. This type of AI will exceed human capabilities and make intelligent decisions thousands of times faster than humans, but only in some areas, while remaining utterly incompetent in most others. In particular, AI’s self-learning and self-improvement capabilities, which are already available, may progressively lead to an unwanted diffusion of superintelligent skills from some specific domains into others, of which we may not even be aware. Therefore, any political or social changes have to be viewed from that perspective: we have just about a decade to remain in control of our own future.

- By about 2030-2035 Artificial Intelligence may reach a stage at which humans are no longer able to fully control the goals of such an immature superintelligent AI, even with the most advanced control mechanisms. The most significant threat in this period is the emergence of a malicious Superintelligence, which may destroy us within a decade or so after we have lost control over its development. This risk trumps other existential risks, such as climate change, because of its imminent arrival and because, in an extreme case, it could lead to the total annihilation of the human species.

- By 2045-2050 Superintelligence may reach its mature stage, becoming either a benevolent or a malevolent entity. If it becomes benevolent, having inherited the best Universal Values of Humanity, it will help us control all other existential risks and gradually create a world of abundance. If it becomes malevolent, it may eradicate all humans. At around that time, Superintelligence may achieve the so-called Technological Singularity. That means it will be able to re-develop itself exponentially, becoming millions of times more intelligent and capable than all of Humanity, and quite probably becoming a conscious entity.

- Whatever happens, humans will either become extinct because of existential risks, such as Superintelligence, or evolve into a new species. It is impossible to stop evolution.

- If Superintelligence emerges by the middle of this century, then we may either become extinct within a very short period or gradually morph with it by the end of the next century.

- If some existential events do happen in the next few decades, especially in combination, such as a pandemic, global warming and a global nuclear war, then our civilisation, and potentially the human species, may become extinct at once. Alternatively, a new civilisation may be built on the remnants of this one, reaching the current technological level within a century and facing existential risks similar to those we face now. That cycle may continue for a few centuries, in which case the human species’ extinction may be delayed, or we will morph into a new species.

- Therefore, Humanity has entered a period of existential threat, in which it can annihilate itself as a species.

- We can no longer stop the development of Superintelligence, but we still have control over its mature form, in particular over whether it becomes benevolent (friendly) or malevolent (malicious).

If you broadly accept these observations but disagree with the pace of change, believing for example that it may take one or two centuries to develop Superintelligence, then you are probably in the vast majority. However, for naysayers, here are some reminders. In September 1933, the eminent physicist Ernest Rutherford gave a speech in which he said that anyone who looked for a source of power in the transformation of atoms was “talking moonshine”. The very next day the Hungarian physicist Leo Szilard, while walking in London, worked out conceptually how a nuclear chain reaction could be used to build a nuclear bomb or a nuclear power station. There are dozens of similar, more recent examples in which top specialists predicted that an invention in their own domain was many years away. However, all of these inventions have already been achieved, either fully or partially, earlier than predicted (the number of years is given in brackets, counting from 2016): AlphaGo (10 years earlier), autonomous vehicles (8 years earlier), quantum computing (10-15 years earlier), quantum encryption (10 years earlier).

If you are still unconvinced, then read some of the articles on this website that you may have missed, or books such as Max Tegmark’s “Life 3.0”. I would welcome your arguments, whether you agree or disagree (simply leave a comment on this page). After all, the purpose of this website is to add suggestions to a common melting pot of ideas, to gain a better understanding of how we can avoid extinction, at least in the next few centuries.

Tony Czarnecki

Sustensis