Let us look at how we could avoid lasting damage to our civilisation over the next 10 years or so, damage that might be caused by creating a malicious Superintelligence. As mentioned earlier on this site, there is a high probability that this decade (2020-30), which I call the period of Immature Superintelligence, might be the most dangerous in human history. In that period, Superpowers and other nations may trigger many dangerous events, e.g. Russia’s attempts to take control over Ukraine, Moldova or the Baltic states, skirmishes in the China Sea over the artificial islands, a real war over Taiwan, an India-Pakistan war, a potential nuclear war between Iran and Israel, and many other local wars. These risks, although not existential on their own, could become existential when combined with other risks, such as catastrophic global warming, pandemics and huge migrations due to drought. However, the real danger for Humanity may come from losing control over AI, as described in an earlier section. According to top AI scientists, such as Stuart Russell in his book ‘Human Compatible’, we must maintain absolute global control over the most advanced AI agents by 2030 at the latest.

Stuart Russell, Nick Bostrom and other AI scientists talk about losing control over AI capability as it gradually matures into a Superintelligence. That is indeed vital. Most of this discussion has concentrated so far on so-called ‘narrow’ AI, that is, on controlling individual, highly sophisticated robots, which can indeed inflict serious damage on their wider environment. However, their malicious action is far less dangerous for human civilisation than the existential danger posed by a malicious AI system with full control over many AI agents and some humans. Such an imperfect, Immature Superintelligence will have, in some domains (but not all), intelligence far exceeding that of humans. In principle, more than one such Immature Superintelligence system could operate at the same time, at least in the early stages of development. Since such an advanced system can be used by its owner (controller) for the owner’s own, potentially malicious aims, such as controlling the world, it is almost obvious that we are entering a period where the Superpowers, or even some very rich individuals, may be tempted to overpower other such sophisticated AI systems in order to gain control over the whole world. There are two questions here:

- Can a certain Superpower teach its version of Superintelligence to fight its competitors and deliver supreme control of the whole world to its owner? I believe it can.

- Can such a Supremacist Superpower, after having conquered all its challengers and become an absolute ruler over all humans, also control its own, still Immature, Superintelligence? My answer to this question is: very unlikely.

A Superpower (let’s call it the Supremacists) will face a dilemma. Control of an Immature Superintelligence by a single Superpower might allow it to rule over the entire planet, yet the final outcome may be much worse for the aggressor and, unfortunately, for the whole of Humanity. Some Superpowers may be pondering this dilemma right now. It is a variant of a well-known problem in game theory, the ‘prisoner’s dilemma’.

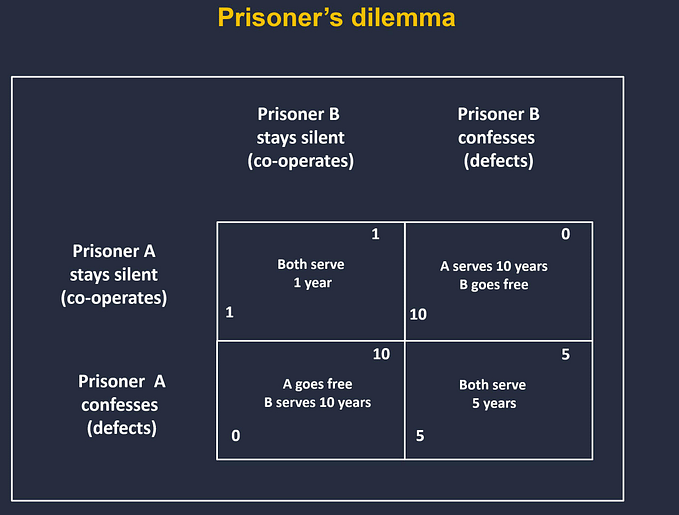

The prisoner’s dilemma has its roots in game theory, and was mathematically best described by Albert W. Tucker and John Nash. It was originally developed for economics but has found an even more profound use in geopolitical strategy, especially during the Cold War era. In the original concept of the prisoner’s dilemma, two prisoners suspected of armed robbery are taken into custody. The police cannot prove they had guns; they only have evidence of the stolen goods, for which the prisoners can be kept in prison for 1 year. To obtain evidence that the prisoners threatened to use a gun, which would keep them in prison for 10 years, the police need one of them to confess. Therefore, the police offer the prisoners a reduced sentence of 5 years each if they both confess to having guns during the robbery. If only one of them denounces the other, he goes free and the other gets 10 years. This is shown in the following diagram (the numbers represent years in jail):

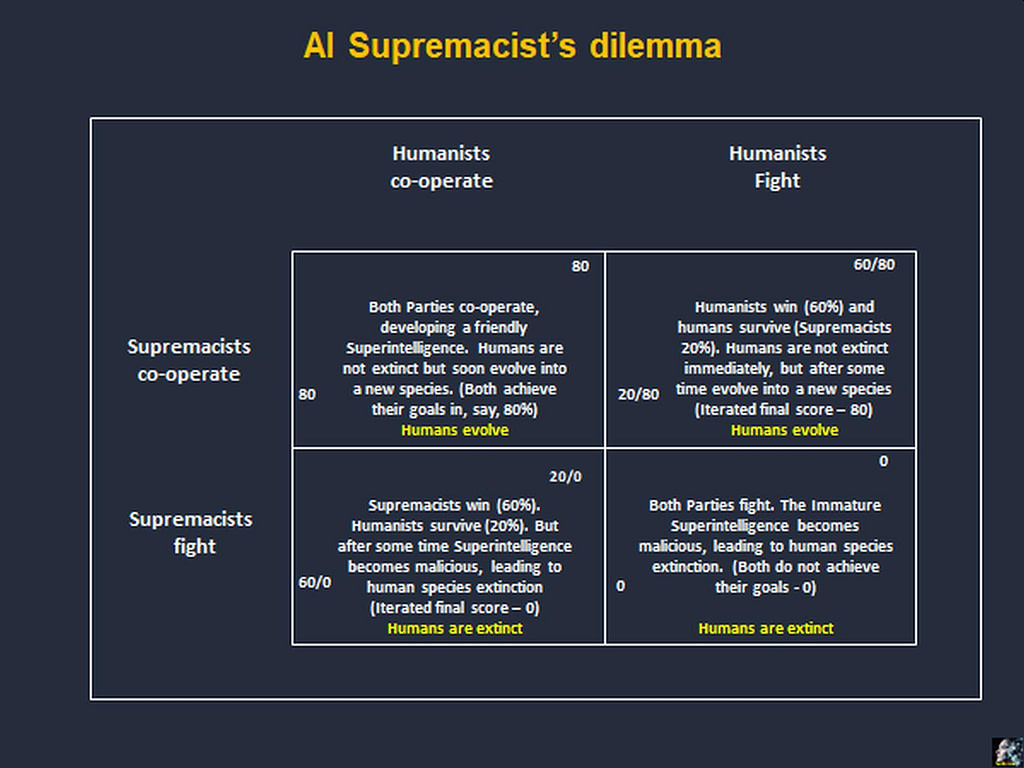
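
The same numbers can be checked in a few lines of Python. This is only a minimal sketch: the names `YEARS`, `best_reply_for_a` and `is_equilibrium` are my own illustrative choices, and the sentences are the ones quoted in the text above (1 year each if both stay silent, 5 years each if both confess, 0 and 10 years if only one denounces the other).

```python
# Years in jail for (prisoner A, prisoner B); lower is better.
# The figures follow the example in the text; all names are illustrative.
SILENT, CONFESS = "silent", "confess"

YEARS = {
    (SILENT, SILENT): (1, 1),     # only the stolen goods can be proven
    (CONFESS, CONFESS): (5, 5),   # both confess: reduced sentence
    (CONFESS, SILENT): (0, 10),   # A denounces B and goes free
    (SILENT, CONFESS): (10, 0),   # B denounces A and goes free
}

def best_reply_for_a(choice_b):
    """Return A's choice that minimises A's own sentence, given B's choice."""
    return min((SILENT, CONFESS), key=lambda a: YEARS[(a, choice_b)][0])

def is_equilibrium(a, b):
    """True if neither prisoner can shorten his own sentence by switching alone."""
    a_ok = all(YEARS[(a, b)][0] <= YEARS[(alt, b)][0] for alt in (SILENT, CONFESS))
    b_ok = all(YEARS[(a, b)][1] <= YEARS[(a, alt)][1] for alt in (SILENT, CONFESS))
    return a_ok and b_ok

equilibria = [pair for pair in YEARS if is_equilibrium(*pair)]

print(best_reply_for_a(SILENT))   # confess
print(best_reply_for_a(CONFESS))  # confess
print(equilibria)                 # [('confess', 'confess')]
```

Whatever the other prisoner does, confessing shortens one's own sentence, so both confess and serve 5 years each, although staying silent together would have cost them only 1 year each.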

Below I have developed a variant of the prisoner’s dilemma in relation to Superintelligence, which I call the AI Supremacist’s Dilemma, with the same rules and assumptions. As in a typical prisoner’s dilemma, the opposing parties choose to protect themselves at the expense of the other participant. The result is that both participants may find themselves in a worse situation than if they had cooperated with each other in reaching a decision.

You may think that the world still has a lot of time to prepare before any of the Superpowers is capable of blackmailing the world with its own superior Superintelligence. I’m afraid this is wishful thinking. Such a danger is here and now, and I provide further evidence in the subsequent sections on this website. Suffice it to say that change is happening at a nearly exponential pace in most domains of human activity. Such a Supremacist Superpower may be tempted to act sooner rather than later, since it will be more difficult to achieve global supremacy in AI towards the end of this decade, when AI becomes more sophisticated and also dispersed among more global players, including very wealthy individuals, a subject which I consider further on.

When applying the prisoner’s dilemma to Superintelligence, I consider a scenario in which there are two Superpowers: the Supremacists and the Humanists, the latter representing the rest of the world. Let’s say that the Supremacists create a Superintelligence that is equal to that of the Humanists. The Supremacists’ objective is to rule the world according to their own values and to impose the will of their nation on the rest of the world. They plan to use Superintelligence to help them achieve that goal, while still remaining its master. In order to do that, they must load their Superintelligence with certain goals (motivators) based on the very top of the Supremacists’ values. One such top value could be, for example, making the Supremacists’ nation, race or religion superior to other nations. If they decide to do that, they would violate the so-called Asimov’s first law for robots (do no harm to humans), now largely superseded by the Asilomar principles.

The consequence might be that such a Superintelligence would indeed initially act maliciously, in the interest of that Superpower only. However, at some stage it might turn against its master, since an evil Superintelligence will not be able to differentiate perfectly between friend and foe, or between evil and good. This is especially likely because in this decade we will only have an Immature Superintelligence, which will be prone to some grave errors. In the end, if such a scenario becomes a reality, nobody will be able to control Superintelligence, which is most likely to be an evil entity. Such an evil Superintelligence may very quickly decide to make us extinct for many reasons of its own. Will the Supremacists be prepared to take such a risk? Will they do it, knowing that there is a high probability that their Superintelligence, built on the key value of supremacy and with the key goal of subjugating all people who are not the Supremacists’ citizens, may in time become evil, annihilating the Supremacist nation and the entire human species?

On the other hand, the Supremacists may consider co-operating with the rest of the world (the Humanists) to jointly develop a friendly Superintelligence, which may be immensely beneficial to all. Instead of fighting each other, Supremacists and Humanists could work together with a mature Superintelligence, to evolve over a longer period into a new, post-human species, which will inherit the values promoted by the future Human Federation. Therefore, the Supremacists will face a dilemma that can be set out as four possible scenarios:

- Scenario 1: Supremacists decide to cooperate with Humanists, after Humanists convince Supremacists to work together, so that in the long term humans will gradually evolve, merging with Superintelligence into a new species, which will inherit the best values of Humanity. Both Parties accept that one of their goals, to survive in a biological form, will not be met. Supremacists will not achieve their objective of ruling the world according to their system of values. Thus, neither party achieves its objectives fully, but each reaches, say, 80%. The result: 80, 80.

- Scenario 2: Supremacists fight their corner and win. They become the supreme world power, imposing their values on all humans. However, after some time, the Immature Superintelligence becomes malicious and all humans become extinct. Supremacists achieve their objective (60%), but in the end they become extinct with the rest of humans (after iteration they fail to achieve their objective, hence 0%). Humanists have lost, but they survive for some time, until the Immature Superintelligence wipes them out as well (20% of their objectives achieved, 0% after iteration). The overall result: 60/0, 20/0.

- Scenario 3: Supremacists fight, but lose. However, they survive for some time in a biological form (20% of their objectives achieved). Humanists did not want the cyber war, but have won. Although the evolution via merger with Superintelligence has been delayed, they achieve their objective at, say, 60%. The final result: 20/80, 60/80.

- Scenario 4: Finally, Supremacists fight but neither they nor the Humanists win. During the fight the Immature Superintelligence became malicious, eliminating all humans. Neither of the Parties achieves their objectives. The result: 0, 0.
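
The four results above can be put side by side in a short Python sketch. The scores are the ones listed in the scenarios; everything else is an assumption made for illustration only: the names `SCENARIOS`, `FIGHT_ODDS` and the helper functions are hypothetical, the probabilities in `FIGHT_ODDS` are invented, and the single scores of Scenarios 1 and 4 are assumed to stay unchanged after iteration.

```python
# (initial %, final % after iteration) of objectives achieved, as listed in the scenarios.
# Scenarios 1 and 4 give one figure only; it is ASSUMED here to hold after iteration too.
SCENARIOS = {
    "1 cooperate":        {"supremacists": (80, 80), "humanists": (80, 80)},
    "2 fight and win":    {"supremacists": (60, 0),  "humanists": (20, 0)},
    "3 fight and lose":   {"supremacists": (20, 80), "humanists": (60, 80)},
    "4 fight, no winner": {"supremacists": (0, 0),   "humanists": (0, 0)},
}

# HYPOTHETICAL probabilities of each outcome once the Supremacists choose to fight.
# These are not from the article; change them to explore other assumptions.
FIGHT_ODDS = {"2 fight and win": 0.4, "3 fight and lose": 0.4, "4 fight, no winner": 0.2}

def final_score(scenario, party):
    """Share of objectives a party has achieved after iteration."""
    return SCENARIOS[scenario][party][1]

def expected_final_if_fighting(party, odds=FIGHT_ODDS):
    """Probability-weighted final score over the three fighting scenarios."""
    return sum(p * final_score(name, party) for name, p in odds.items())

cooperate = final_score("1 cooperate", "supremacists")
fight = expected_final_if_fighting("supremacists")
best_fight = max(final_score(name, "supremacists") for name in FIGHT_ODDS)

print("cooperate:", cooperate)
print("fight (expected, hypothetical odds):", fight)
print("fight (best possible final score):", best_fight)
```

With these illustrative numbers, even the best final score the Supremacists can reach by fighting does not exceed what they get by cooperating, and they reach it only in the scenario where they lose. Any mix of odds that gives weight to Scenarios 2 or 4 makes fighting strictly worse for them in the long run.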

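The scenarios are represented in the table below (share of objectives achieved, in %; where two figures are given, the second is the result after iteration):

| Scenario | Supremacists | Humanists |
|---|---|---|
| 1. Supremacists cooperate with Humanists | 80 | 80 |
| 2. Supremacists fight and win | 60/0 | 20/0 |
| 3. Supremacists fight and lose | 20/80 | 60/80 |
| 4. Supremacists fight, nobody wins | 0 | 0 |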

The numbers in the AI control dilemma are of course only exemplary, illustrating the point. I am almost certain that most Superpowers are already playing that game, trying to find a solution that would be significantly better for them than for the opposing power. However, after the world has experienced first-hand the severe consequences of Cyber-attacks for several years, it will become obvious to all players on the geopolitical stage that there can be no outright winner in an all-out War of Superintelligences. It should also become clear in any war games that no Superpower can realistically expect to gain supreme advantage over the rest of the world by developing and controlling its ‘own’ Superintelligence, one that would destroy only the Superpower’s adversaries. Additionally, in such a context, any potential advantage gained in conventional or local nuclear wars would mean very little for the overall strategic position of a given Superpower. Moreover, any ‘hot’ global or local war makes no strategic sense. Instead, as has been mentioned before, the only hope for Humanity is to ‘nurture’ Superintelligence in accordance with the best values of Humanity.

However, neither the prisoner’s dilemma nor the AI control dilemma would work with psychopaths. If some mad scientists, dictators, very wealthy individuals or Transhumans want to inflict damage on Humanity, even if they themselves perish as a result of their action, e.g. in the style of Stanley Kubrick’s ‘Dr Strangelove’, then the logic of the AI Supremacist’s Dilemma will not hold. Such psychopaths may literally destroy Humanity. Therefore, as with conventional or nuclear wars (e.g. North Korea), the world may have to pre-empt such potential malicious action by destroying dangerous AI facilities while it is still capable of doing so. This may be a lesser risk than letting psychopaths do severe damage to the world.

When the Superpowers realise within this decade that an all-out Cyber-War can have no winner other than Superintelligence itself, a fairly positive scenario opens up, and I can offer some additional optimism. I believe we can expect, in the next 10-15 years, some unimaginable breakthroughs in planetary co-operation, for example:

- Stalemate in achieving global supremacy may lead to opening gambits, i.e. giving up a previously held advantage as a quid pro quo. An example is the Intermediate-Range Nuclear Forces (INF) Treaty, signed in 1987 between the Soviet Union and the USA, which was recently abandoned by both Russia and the USA. It is quite likely that this may be ‘repaired’ by a new treaty with better controls and an even steeper reduction of nuclear arsenals

- AI Superpowers will end Cyber Wars, and will focus instead on developing a single, friendly Superintelligence

- A European Federation is very likely to be set up by the end of this decade, creating membership zones almost seamlessly and becoming the most important organisation in the world. This could be the beginning of the future Human Federation.

- NATO may be fused with the EF by the end of the decade

- Should the European Federation not be set up by the end of the decade, NATO might take on that role by expanding its scope of activities to include Cyber-war prevention, the economy, health and infrastructure

- Russia may join one of the zones of the European Federation (EF) as a result of economic decline in the post-Putin era. This may become a pivotal moment in the federalization of the world. Paradoxically, it may happen earlier than the USA joining the EF, the ‘nursery’ of the Human Federation, although the order in which the two countries join the EF is less important

- The UN establishes a majority voting system in the Security Council, although that may be irrelevant if Russia (and possibly China, although it would probably join last) has already joined the EF and NATO.

I know that this looks like an almost idealistic scenario, one that would enable democracy to be rebuilt and spread throughout the world much more easily and effectively. However, I would rather take a more realistic approach and assume that the new system of democracy will be born in pain, at a time of severe distress or perhaps even apocalyptic danger. That danger may also stem from the rising capabilities of Immature Superintelligence. People are often divided by an ‘Us’ vs ‘Them’ perception. Perhaps the threat from an ever more capable Superintelligence could unite Humanity under an ‘Us’ vs ‘It’ agenda, ‘It’ being the Superintelligence.

Tony Czarnecki, Sustensis
